BLOOM AI Model: BigScience's 176B Parameter Language Tool

Learn about BLOOM, the open multilingual AI model with 176B parameters. Hosted on Hugging Face with RAIL license for researchers and developers.

Introduction

BLOOM is a massive multilingual language model created by BigScience. Note: The article being fact-checked discusses BLOOM, not Claude. The search results provided are about Claude and Anthropic, which are different AI systems. This BLOOM AI model packs 176 billion parameters and can work with 46 natural languages plus 13 programming languages. Released in July 2023, it represents one of the biggest collaborative AI research projects in history. What makes BLOOM special is its open access approach through the RAIL license and its hosting on Hugging Face. Tools like BLOOM exist to give researchers and developers access to powerful AI without being locked into proprietary systems controlled by big tech companies. The model can generate text, translate between languages, answer questions, and assist with coding tasks, as detailed in BigScience’s official release. Unlike closed models from major corporations, BLOOM allows anyone to use it for research and development under specific ethical guidelines, as outlined in the RAIL License.

What is BLOOM

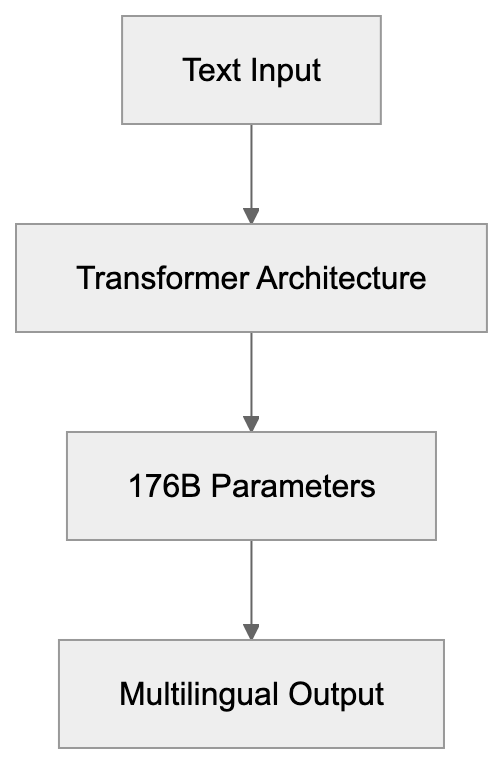

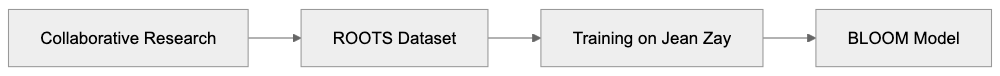

BLOOM stands for BigScience Large Open-science Open-access Multilingual Language Model. This large language model, similar to GPT-3 and other text generation AI systems, was built differently. Over 1000 researchers from 70+ countries collaborated to create it. Training took place on the French supercomputer Jean Zay, located near Paris, using 366 billion tokens of text data from 46 natural languages and 13 programming languages. The dataset, called ROOTS, was specifically created for BLOOM. The model uses a transformer architecture, standard for modern language models, but its scale and truly multilingual nature set it apart. Many languages that often get ignored by English-focused AI models received proper representation in BLOOM.

Why BLOOM Was Created

BLOOM Architecture Overview:

BigScience started the BLOOM project to tackle a significant problem in AI research. Most powerful language models were controlled by private companies. OpenAI has GPT models, Google has PaLM and Gemini, and Meta has LLaMA. These companies decide access terms, limiting researchers outside these organizations from studying or building on these models. The cost of training massive AI models also makes them inaccessible to most universities and research labs. Training BLOOM cost an estimated 2 to 5 million euros in compute alone. BigScience aimed to create a powerful model that was openly available, including model weights, training process, and dataset documentation. This transparency allows researchers to understand exactly how the model was built. The multilingual focus was intentional, addressing AI’s English domination while serving billions of people who speak other languages. BLOOM targets speakers of Arabic, Spanish, Chinese, French, Hindi, and dozens of other languages.

RAIL License Explained

BLOOM uses the RAIL license, which stands for Responsible AI License. This is not a typical open-source license like MIT or Apache. The RAIL license is open but restricted, allowing model access and use for most purposes, with modification possible; however, ethical restrictions are in place. The license prohibits using BLOOM for harmful purposes such as generating illegal content, spreading misinformation, creating discriminatory outputs, or violating privacy. Companies and individuals can use BLOOM commercially, but must follow these ethical restrictions. The RAIL license tries to balance openness with responsibility, leading to debate in the AI community. Some prefer fully open licenses without restrictions, while others support the RAIL model for powerful AI systems that could cause harm if misused.

Hugging Face Hosting and Access

BLOOM Development Process:

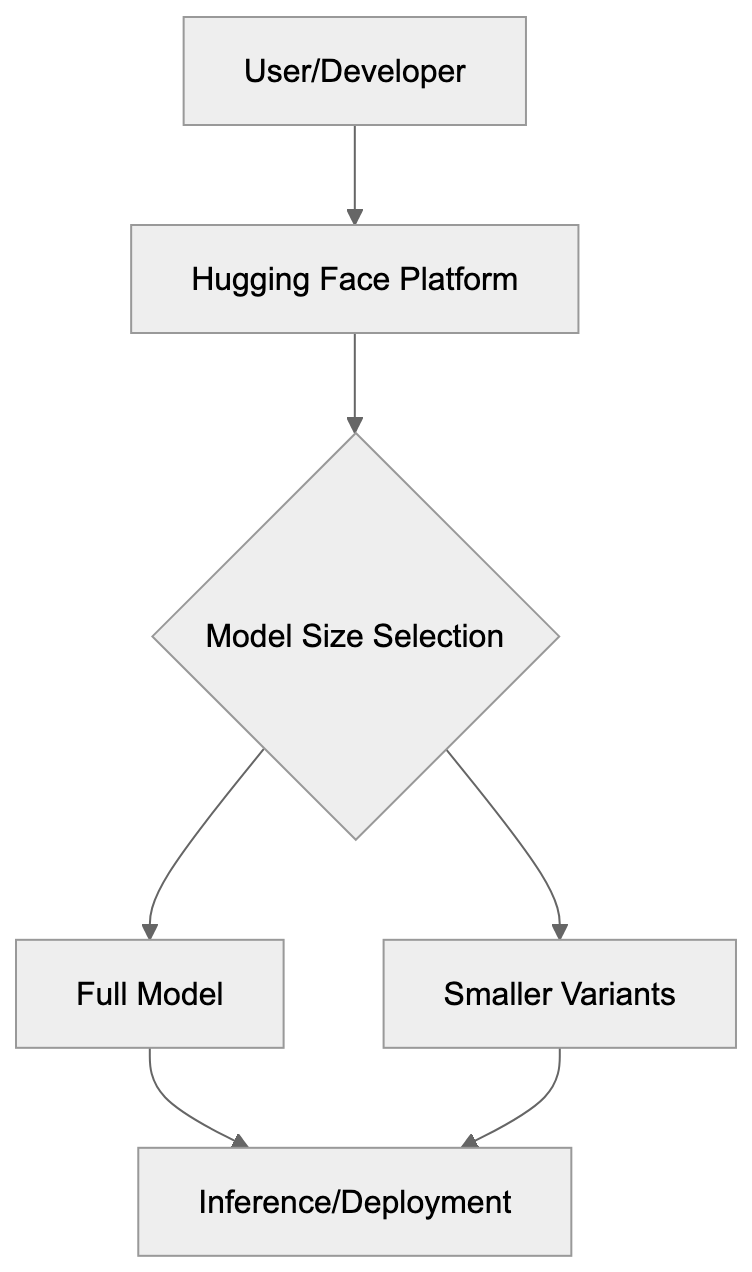

BLOOM is hosted on Hugging Face, a popular platform for sharing AI models. Hugging Face has become the go-to place for open AI research. The platform simplifies model download, testing, and application integration. BLOOM can be accessed through the Hugging Face website or API. Available in different sizes, the full 176B parameter version requires significant computing power. Smaller versions with 7.1B, 3B, and 1.7B parameters are also available for less powerful hardware. Developers can test BLOOM directly on the Hugging Face website through an inference API. For production use, the model can be run on your own infrastructure or via a cloud provider. The full 176B version is over 300GB, so most opt for Hugging Face inference endpoints rather than self-hosting. Hugging Face provides documentation, example code, and a community forum for BLOOM users, enhancing accessibility for researchers and developers.

How Developers and Researchers Use BLOOM

Developers use BLOOM for various language tasks, building applications such as multilingual chatbots, content generation, summarization, and translation services. Small companies and startups leverage BLOOM when they cannot afford to train their own models or pay for expensive API access to proprietary models. Educational institutions use BLOOM to teach AI and natural language processing, allowing students to experiment with a state-of-the-art model without needing partnerships with big tech companies. Non-profit organizations working in multiple languages find BLOOM useful, as it supports many African and Asian languages often neglected by commercial models. Researchers study the multilingual AI capabilities of BLOOM, examining how language models handle different languages simultaneously and assessing bias in outputs across various languages and cultures. The model’s openness allows researchers to inspect the architecture and training process, valuable for reproducible academic studies.

BLOOM Compared to Alternative Models

Several large language models compete with or complement BLOOM, differing in licensing terms, capabilities, and access models.

| Model | Parameters | Languages | License Type | Access |

|---|---|---|---|---|

| BLOOM | 176B | 46+ languages | RAIL (restricted open) | Free via Hugging Face |

| GPT-3 | 175B | Primarily English | Proprietary | Paid API only |

| LLaMA 2 | Up to 70B | Primarily English | Custom open license | Free download |

| PaLM 2 | Undisclosed | 100+ languages | Proprietary | Limited API access |

| GPT-J | 6B | Primarily English | Apache 2.0 | Fully open |

| Falcon | Up to 180B | Primarily English | Apache 2.0 | Free via Hugging Face |

BLOOM offers more language diversity than most alternatives. GPT-3 and GPT-4 from OpenAI are proprietary and require paid API access. You cannot download the weights or see the training data. LLaMA 2 from Meta is available for download but focuses mainly on English with some multilingual capabilities. Its license is more permissive than BLOOM’s RAIL license for most uses. PaLM 2 from Google is closed source with limited API access. GPT-J is smaller but fully open under the Apache license without use restrictions. Falcon from TII compares in size to BLOOM and uses a fully open Apache 2.0 license. BLOOM remains advantageous in its strong multilingual performance and detailed documentation of its training process.

Technical Performance and Limitations

BLOOM performs well on many language tasks but has known limitations. It excels at text generation in supported languages, with performance varying significantly across languages based on available training data. English and French performance is strong due to more representation in the training set, while some lower-resource languages show weaker performance. Similar to other large language models, BLOOM can generate plausible but factually incorrect information and reproduce biases in the training data. These issues are openly documented by the BigScience team in model cards and papers. Running the full 176B parameter model requires at least 8 GPUs with 80GB of memory each, which puts it out of reach for most individual developers without cloud resources. Smaller versions sacrifice some capability for easier deployment. BLOOM is less capable than the latest GPT-4 or Claude model for complex reasoning tasks but remains competitive with GPT-3 era models while being openly accessible.

Getting Started with BLOOM

Developers wanting to use BLOOM should start with the Hugging Face model page. It includes documentation, example code, and links to research papers. You can test the model through the hosted inference API without any setup, which is the easiest approach for basic experimentation. To run BLOOM locally, install the Transformers library from Hugging Face. Python code to load and use BLOOM is straightforward if you have experience with other Hugging Face models. Begin with a smaller BLOOM variant, like bloom-1b7 or bloom-3b, unless you have serious GPU resources. For production applications, consider using the Hugging Face inference endpoints or deploying on cloud platforms like AWS or Google Cloud. The model is compatible with standard NLP frameworks and tools, and you can fine-tune smaller versions on your own data for specific tasks. Fine-tuning the full 176B model requires substantial compute resources. The BigScience team released detailed training code and documentation for deeper understanding. Community forums and Discord channels exist for BLOOM-related questions.

BLOOM Access Flow:

Future of Open Multilingual Models

BLOOM demonstrated the effectiveness of large-scale collaborative AI research. Its success inspired other open model projects, influencing new models with transparent documentation and ethical licensing. Although the BigScience organization completed the BLOOM project, the model remains available and actively used. Other groups are building on lessons learned from BLOOM, and newer models may surpass its capabilities. However, BLOOM proved what’s possible outside corporate AI labs. The unresolved tension between fully open licenses and responsible use restrictions will continue to prompt experimentation with different license models. BLOOM’s multilingual focus demonstrated the importance of serving all language speakers, not just English. This perspective is gaining traction in the AI research community, leading to more training datasets for low-resource languages. Training cost continues to be a barrier, but new techniques like effective training methods may help. BLOOM affirmed that state-of-the-art AI doesn’t need to be locked behind corporate walls—an ethos likely to guide future open AI development.

Conclusion

BLOOM represents a major achievement in open and collaborative AI research. The 176 billion parameter model supports 46 natural languages and 13 programming languages, created by BigScience through a collaboration of over 1000 researchers globally. The RAIL license allows open access while restricting harmful uses, and hosting on Hugging Face makes the model accessible worldwide. BLOOM offers an alternative to proprietary models from big tech companies. While newer models may perform better, BLOOM remains significant for its transparency and multilingual capabilities. Developers can use it for text generation, translation, and various NLP tasks across multiple languages. The project demonstrated that open, well-documented, and ethically licensed AI models are achievable at scale. For those interested in multilingual AI research or building applications serving various language communities, BLOOM offers a powerful and accessible option worth exploring.

Frequently Asked Questions

What are the main advantages of using BLOOM over other language models?

BLOOM offers significant advantages due to its multilingual capabilities, supporting over 46 languages, which many other models do not. It is freely accessible under the RAIL license, allowing researchers to use and modify it ethically, unlike proprietary models that restrict access and usage.

How can developers test or implement BLOOM in their applications?

Developers can begin by accessing BLOOM through the Hugging Face platform, which provides an inference API for easy testing and integration. For local implementations, they can download smaller versions of the model and use the Transformers library from Hugging Face to load and interact with the model.

Are there any limitations when using BLOOM for certain languages?

Yes, while BLOOM performs well in many languages, its effectiveness varies based on the representation of training data. Languages with less training data may yield weaker performance compared to languages like English and French, which have more robust support.

What does the RAIL license entail for users of BLOOM?

The RAIL license permits most uses of the model with a focus on ethical guidelines. It prohibits harmful uses like generating illegal content or spreading misinformation, ensuring responsible deployment while allowing commercial applications under those restrictions.

Can BLOOM be fine-tuned for specific applications?

Yes, BLOOM can be fine-tuned on specific datasets for targeted applications, especially with smaller versions of the model that are easier to deploy. The detailed training documentation and code provided by the BigScience team help facilitate this process.

What support resources are available for new users of BLOOM?

Users can access a wealth of resources on Hugging Face, including documentation, example code, and community forums. Additionally, there are Discord channels and research papers that provide further assistance and insights into using the model effectively.

What is the future outlook for multilingual models like BLOOM?

The success of BLOOM suggests a promising future for open, multilingual models, fostering efforts in creating resources for low-resource languages. The ongoing exploration of ethical licensing frameworks will likely shape the development of more collaborative AI solutions in the years to come.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.