GPT-NeoX: EleutherAI's Open Source LLM Explained

Complete guide to GPT-NeoX and EleutherAI's open-source language models. Learn about Apache 2.0 licensing, The Pile dataset, and alternatives.

Introduction

GPT-NeoX is an open-source large language model developed by EleutherAI. This non-profit research group builds AI models that anyone can use, modify, and study without restrictions. Unlike proprietary models from big tech companies, GPT-NeoX comes with Apache 2.0 licensing, allowing commercial use without fees. EleutherAI created GPT-NeoX to democratize AI research and provide the community with tools that match corporate capabilities, as detailed in their GitHub repository. The model was trained on The Pile, a massive 825GB dataset specifically designed for language model training. This open-source AI effort represents a milestone in community-driven development, showcasing how open collaboration can rival corporate efforts.

What is GPT-NeoX and EleutherAI

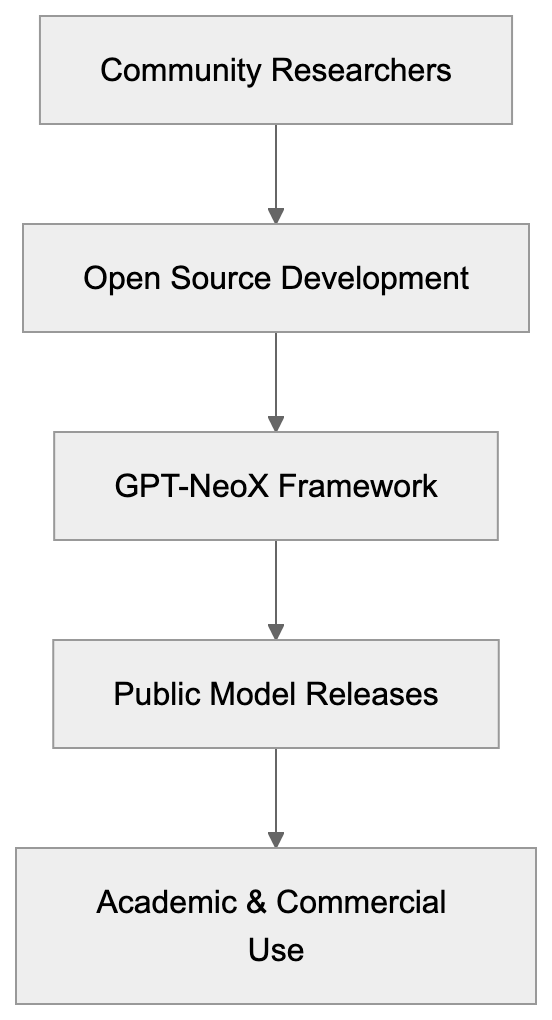

GPT-NeoX Development Approach:

EleutherAI began as a grassroots collective of AI researchers and engineers aiming to recreate GPT-3 capabilities in an open-source context. Operating as a non-profit research lab, EleutherAI focuses on making AI accessible to all. GPT-NeoX is their flagship model architecture, designed for scalable large language model (LLM) training. The framework supports models with billions of parameters and operates on distributed GPU clusters. EleutherAI released several model checkpoints, including a 20 billion parameter version. The codebase, written in Python, is built on PyTorch and DeepSpeed, optimizing the LLM training process. Under the Apache 2.0 license, commercial use is permitted without sharing modifications, distinguishing it from models like GPT-3 that require API access and impose usage restrictions.

Why GPT-NeoX Exists and Its Purpose

Before EleutherAI, powerful language models were often locked behind corporate walls, inaccessible for independent study. GPT-NeoX was created to address this lack of transparency. EleutherAI’s project provides academics, startups, and developers with access to cutting-edge language models. By leveraging open source GPT research, EleutherAI demonstrates how collaborative efforts can match corporate resources, reducing risks to innovation and fairness. The project enables users to train custom models from scratch for specific domains. Additionally, GPT-NeoX serves as an educational resource for understanding large-scale model training, widely used in universities and research labs. EleutherAI continues to develop GPT-NeoX to support increasingly larger models as hardware improves.

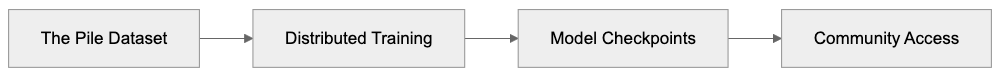

GPT-NeoX Training Pipeline:

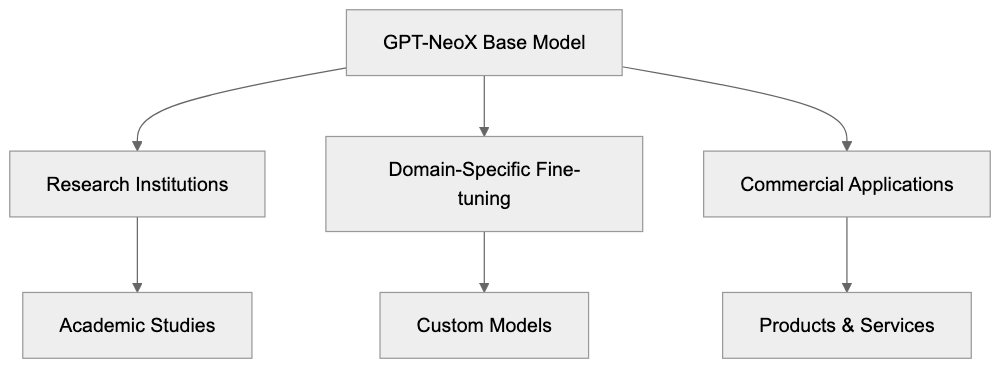

How Users and Organizations Use GPT-NeoX

Research institutions utilize GPT-NeoX to study language model behavior without corporate limitations. Universities develop domain-specific models for fields like law, medicine, and science. Startups build products on GPT-NeoX, avoiding the cost of proprietary API access. Developers fine-tune the base models for tasks such as chatbots, content generation, and code completion. The open-source AI nature allows security experts to audit models for biases. Organizations in regulated industries trust GPT-NeoX for on-premises data handling. Academic works often cite GPT-NeoX as a benchmark for new architecture comparisons. The community actively contributes improvements, with some users running smaller versions on consumer hardware. Documentation aids newcomers in understanding distributed training concepts, and the EleutherAI Discord community supports knowledge sharing.

The Pile Dataset and Training Process

The Pile is an 825GB dataset created to train large language models, assembled from 22 sources like books, websites, scientific papers, and code repositories. It includes 300 billion tokens, with components like PubMed Central, GitHub, and Wikipedia. Specialized datasets such as ArXiv papers and Stack Exchange discussions are also part of The Pile. EleutherAI’s transparency in data sourcing allows researchers to understand the model influences. GPT-NeoX models were trained on The Pile using distributed GPU training. The 20 billion parameter model required substantial computational resources, utilizing mixed precision for efficient computation without compromising quality. EleutherAI published training metrics for replicability, with The Pile freely available for training new models, contrasting with proprietary models’ secrecy. This openness facilitates research on data composition’s impact on model capabilities.

GPT-NeoX Compared to Alternative Models

Several open-source language models compete with GPT-NeoX:

| Model | Developer | Parameters | License | Training Data | Commercial Use |

|---|---|---|---|---|---|

| GPT-NeoX | EleutherAI | 20B | Apache 2.0 | The Pile (825GB) | Yes, unrestricted |

| BLOOM | BigScience | 176B | RAIL License | ROOTS (1.6TB) | Yes, with restrictions |

| LLaMA | Meta | 7B-65B | Custom | Undisclosed | Research only initially |

| Falcon | TII | 40B-180B | Apache 2.0 | RefinedWeb | Yes, unrestricted |

| Pythia | EleutherAI | 70M-12B | Apache 2.0 | The Pile | Yes, unrestricted |

GPT-NeoX Use Cases:

GPT-NeoX finds a balance between model size and open-source accessibility. BLOOM is larger but has usage restrictions. Initially, LLaMA prohibited commercial use, a stance revised with LLaMA 2. Falcon offers newer models trained on more recent data than GPT-NeoX. Pythia represents EleutherAI’s exploration of model scaling. GPT-NeoX is popular due to its permissive license and comprehensive documentation, serving as a baseline for research.

Technical Implementation and Requirements

GPT-NeoX utilizes the GPT architecture, modified for effective large-scale training. The framework employs model parallelism across multiple GPUs, supporting pipeline and tensor parallelism for scaling. DeepSpeed integration enhances memory use and speeds up training. Substantial GPU memory is necessary for the full 20B parameter model, though smaller versions can run on single high-end consumer GPUs. Rotary positional embeddings improve handling of longer sequences. Training configuration files detail hyperparameters like learning rate, batch size, and model dimensions. Pretrained tokenizers optimized for The Pile are available, facilitating fine-tuning on custom datasets with moderate resources. Gradient checkpointing reduces memory use during training. Installation is required but well-documented, with active project development for improvements.

Community Impact and Future Development

GPT-NeoX has proven that community-driven initiatives can compete with corporate AI labs, inspiring projects like BLOOM and Stable Diffusion. EleutherAI’s success in open source GPT demonstrated the feasibility of cost-effective AI research. Many papers cite GPT-NeoX for language model scaling studies, creating a transparent AI development model. EleutherAI continues to refine GPT-NeoX, releasing new models like Pythia, focused on interpretability. Regular updates on research and releases ensure ongoing engagement. Contributions from the community enhance the project, and while commercial usage is hard to quantify, many startups rely on GPT-NeoX. EleutherAI collaborates with academic institutions, planning future models as resources allow. The organization advocates for open AI research and transparency.

Conclusion

GPT-NeoX marks a significant achievement in open-source AI development. EleutherAI has shown that community-driven research can rival corporate model capabilities. The Apache 2.0 license eliminates barriers for researchers and developers, and training on The Pile dataset ensures transparency. Though newer models surpass GPT-NeoX, it remains a crucial reference. The project set standards for open AI research, influencing future developments. GPT-NeoX remains relevant for applications needing full access and control over models. EleutherAI’s efforts underscore the importance of accessible and transparent AI technology.

Frequently Asked Questions

What are the main advantages of using GPT-NeoX?

GPT-NeoX offers open-source access, allowing researchers to modify and study the model without restrictions. It also supports commercial use under the Apache 2.0 license, making it accessible for startups and developers looking for cost-effective AI solutions.

How can I access and use GPT-NeoX?

You can access GPT-NeoX through its GitHub repository, which provides the code and documentation for installation and usage. Users can download pre-trained models and follow the guidelines to fine-tune them for specific tasks or datasets.

What types of projects are best suited for GPT-NeoX?

Projects involving natural language processing, such as chatbots, content generation, or domain-specific academic work, are ideal candidates for GPT-NeoX. Its ability to be customized and fine-tuned allows users to enhance performance in various fields, including law and medicine.

What hardware do I need to run GPT-NeoX?

The full 20B parameter model requires substantial GPU memory, typically needing high-end GPUs for efficient operation. However, smaller versions of GPT-NeoX can run on consumer-grade hardware, making it accessible for a broad range of users.

How does the training process of GPT-NeoX work?

GPT-NeoX is trained using a distributed GPU training process on The Pile dataset, which consists of diverse sources to ensure robust language comprehension. The training incorporates techniques like mixed precision for efficiency and gradient checkpointing to reduce memory usage.

Can I contribute to the development of GPT-NeoX?

Yes, the EleutherAI community encourages contributions to improve GPT-NeoX. You can participate by providing feedback, reporting issues, or submitting enhancements through the GitHub repository.

What is The Pile, and why is it important for GPT-NeoX?

The Pile is a comprehensive 825GB dataset designed to train large language models like GPT-NeoX. Its diverse sources ensure that the model develops a well-rounded understanding of language, which is crucial for its performance in real-world applications.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.