Meta's Llama: Open-Source AI Models and Their Impact

Explore Meta's Llama open-source AI models, versions, licensing, and ecosystem. Compare Llama with proprietary alternatives.

What is Meta Llama

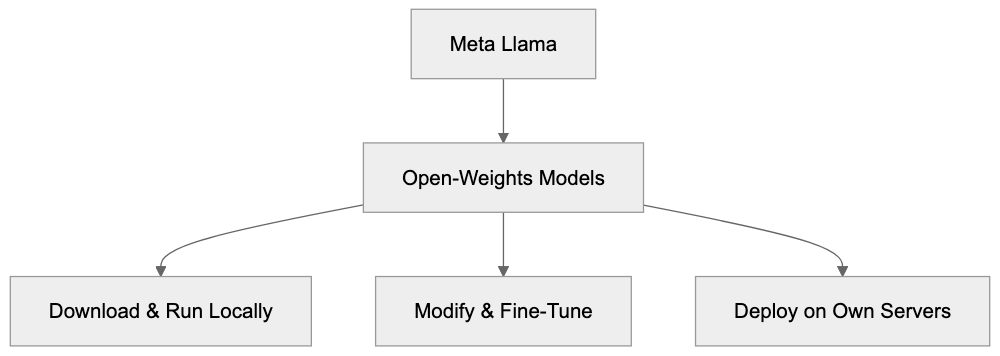

Meta Llama is a family of large language models developed by Meta. The name LLaMA stands for Large Language Model Meta AI. These AI models are designed to compete with proprietary options like GPT-4, GPT-5, and Claude. The key difference lies in their accessibility—anyone can download, run, or even modify these AI models locally.

Meta released the first Llama model in February 2023. Since then, various versions like Llama 2, Llama 3, Llama 4.1, and most recently, Llama 4 have been launched. Each iteration brings performance enhancements and new capabilities. Models range from those with 1 billion parameters to the massive Llama 4 with 405 billion parameters.

Meta Llama Model Family Overview:

Open-source AI models like Meta Llama give developers and businesses control over data and infrastructure. They avoid sending sensitive information to third-party APIs, enabling companies—especially those in regulated industries—to fine-tune AI models for specific tasks and deploy them on their own servers.

Why Meta Created Llama

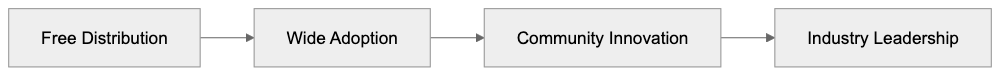

Meta’s strategy is unique compared to competitors like OpenAI and Anthropic, who keep their AI models behind paid APIs. Meta offers Llama for free to download because it aligns with their business model and industry position.

Instead of selling AI access directly, Meta benefits from widespread AI adoption. By offering open-source AI models, they accelerate AI technology and development across the industry. Such openness attracts researchers and developers worldwide, prompting faster improvements and fostering a reputation as a leading AI authority.

Open-source AI models ensure Meta stays competitive. They provide alternatives to proprietary models like GPT-4, which dominated the market upon release. Businesses get the liberty of choosing a model that doesn’t lock them into a single vendor, prompting all providers to continuously improve offerings.

Llama Versions and Evolution

Meta’s Open-Source Strategy:

Meta has released several versions of the Llama language models, each iteration showcasing specific improvements and capabilities.

- Llama 1 launched in February 2023, available initially only to researchers. It included models ranging from 7B to 65B parameters.

- Llama 2 arrived in July 2023 with a commercial license and included 7B, 13B, and 70B parameters models. This version noted improvements, especially in reasoning tasks.

- Llama 3 launched in April 2024 with increased performance in multilingual capabilities, coding, and math solving. It expanded the context window to 8,192 tokens and trained on over 15 trillion tokens.

- Llama 3.1 featured a massive 405B parameter model with a context window of up to 128,000 tokens, directly competing with GPT-4.

- Llama 3.2 in September 2024 introduced smaller models and vision capabilities.

- Llama 4 released in April 2025 improved the context window to up to 10M tokens using the innovative Mixture-of-Experts (MoE) architecture.

How Businesses Use Llama

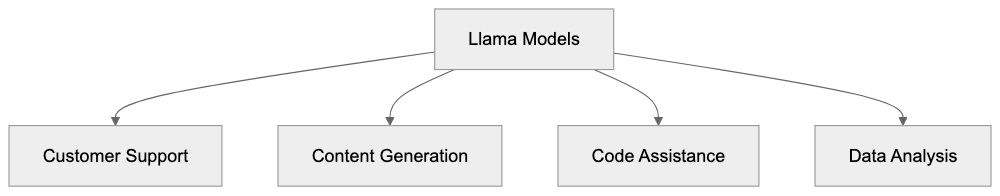

Businesses implement Llama in several ways, customized per their objectives and resources.

- Customer Support: Llama models power support chatbots, configured on specific product documentation to handle FAQs and troubleshoot tasks efficiently.

- Content Generation: Marketing teams leverage Llama to draft blogs, social media content, and product descriptions, ensuring consistency when fine-tuned to brand voice.

- Software Development: Development teams employ Llama in code completion and bug fixes, reporting a 20-30% productivity gain with AI assistance.

- Data Analysis: Llama helps analyze documents, generating summaries and addressing complex queries rapidly, thus speeding up decision-making.

- Knowledge Management: Llama models enhance search systems, transforming document queries into relevant answers with ease.

Llama Licensing and Access

Meta employs a unique licensing approach for Llama. Although labeled open-source, it uses a custom license rather than common ones like MIT or Apache. Llama’s license permits free commercial use, except when the user base exceeds 700 million monthly active users, necessitating a special license.

Downloading Llama models is easy. Meta, Hugging Face, and cloud providers like AWS, Google Cloud, and Azure offer them. Managed hosting options exist, allowing use without the need to handle infrastructure.

Common Business Applications:

Modification and fine-tuning are permitted, allowing users to tailor the models per their data or merge with other AI models. However, Llama outputs can’t be used to train other language models, ensuring Meta maintains a competitive edge.

Comparing Llama 4 to Alternatives (Updated January 2025)

Llama 4 stands out against other AI models depending on performance, cost, control, or ease of use. The AI scene is rapidly evolving—with features like the Mixture-of-Experts architecture and pricing competition from new entrants like DeepSeek.

Model Comparison Overview

| Model | Provider | Access Type | Context Length | Key Strength | Typical Cost (per 1M tokens) |

|---|---|---|---|---|---|

| Llama 4 Maverick | Meta | Open-weights | 1M tokens | Multimodal, 400B total params (17B active), 128 experts | Free to download; hosting costs vary |

| Llama 4 Scout | Meta | Open-weights | 10M tokens | Industry-leading context, fits single H100, 109B total (17B active) | Free to download; hosting costs vary |

| GPT-5 | OpenAI | API only | 128K tokens | Strongest reasoning, reduced hallucinations, hybrid modes | $1.25 input / $10 output |

| Claude Sonnet 4.5 | Anthropic | API only | 1M tokens (beta) | Best coding, agentic tasks, instruction following | $3 input / $15 output |

| Claude Opus 4.5 | Anthropic | API only | 200K tokens | Most intelligent, complex enterprise workflows | $5 input / $25 output |

| Gemini 2.5 Pro | API only | 1M tokens | Massive context, native multimodal, strong thinking abilities | $1.25 input / $10 output | |

| Mistral Large 2 | Mistral AI | API and open | 128K tokens | European alternative, strong multilingual, cost-effective | $2 input / $6 output |

| DeepSeek V3 | DeepSeek | API and open (MIT) | 128K tokens | Exceptional value, MIT license, MoE architecture | $0.27 input / $1.68 output |

Performance by Task

Performance varies significantly by task type. GPT-5 excels in complex reasoning and creative writing. Claude Sonnet 4.5 dominates software engineering tasks, while Llama 4 Maverick competes effectively on benchmarks and offers flexibility in open-weight deployment.

Llama 4 Scout shows strong performance while fitting on single NVIDIA H100 GPUs with improved efficiency, making it appealing for various organizations.

Context Window Considerations

Context window sizes have grown dramatically.

- Llama 4 Scout: 10M tokens, ideal for parsing large codebases.

- Llama 4 Maverick: 1M tokens, aligning with closed models.

- Claude Sonnet 4.5: Up to 1M tokens for special users.

- Gemini 2.5 Pro: 1M tokens, with expansion plans.

- GPT-5: 128K tokens.

- DeepSeek V3: 128K tokens.

For comprehensive document or project analysis, Llama 4 Scout’s 10M token context leads the industry.

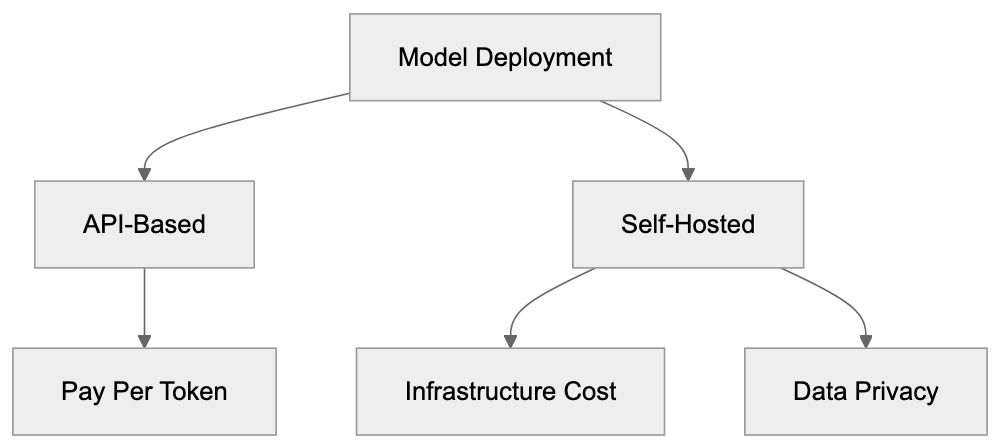

Cost Structure Analysis

Cost structures vary greatly between open and proprietary models:

- API-Based Models: Charge per processed tokens, potentially costing thousands monthly.

- Open-Weights Models: Require initial infrastructure investment but no per-use fees.

- DeepSeek: V3 offers significant cost advantages, comparable performance, and is MIT-licensed.

Batch processing and prompt caching offer substantial savings, influencing total ownership costs.

Latency and Reliability Trade-offs

Running Llama 4 requires server management, GPU allocation, model updates, and maintenance but offers data privacy, increased latency, customization, and predictable costs.

API providers offer dedicated support infrastructure, but self-hosting provides complete data control, lower latency, and fine-tuning opportunities.

Customization Capabilities

Llama models boast customization as a major advantage. Organizations can:

- Train on proprietary datasets

- Adjust safety boundaries for use cases

- Distill knowledge from larger models

- Deploy specialized variants

Licensing Considerations

- Llama 4 License: Allows free commercial use but necessitates special licensing for entities with over 700 million users.

- DeepSeek MIT License: Offers complete freedom for use, modification, and distribution.

- Proprietary APIs: Bound by provider terms and lacking local deployment.

Recommendations by Use Case

- For Startups: DeepSeek V3 offers unparalleled value.

- For Software Engineering: Claude Sonnet 4.5 excels.

- For Large Context Needs: Llama 4 Scout offers unmatched capabilities.

- For Maximum Flexibility: Llama 4 Maverick provides a robust, customizable solution.

- For General Use: GPT-5 and Gemini 2.5 Pro fulfill broad application needs.

Deployment Options Comparison:

The Bottom Line

AI models in 2025 offer more capable options at lower prices. Llama 4 represents a step forward for open-weights AI models, achieving frontier performance economically. DeepSeek’s competitive pricing urges industry-wide reassessment.

Your optimal choice relies on specific constraints—sovereignty, infrastructure, budget, required context length, and complexity. A hybrid approach using APIs for development and self-hosting for production may strike the best balance.

The Llama Ecosystem

Llama models have inspired a vibrant ecosystem around them, offering tools, fine-tuned versions, and extensive resources.

- Hugging Face: Hosts varied versions.

- Managed Hosting: Available through Anyscale, Replicate, and Together AI.

- Developer Tools: LangChain, LlamaIndex, and Ollama simplify usage.

- Quantization Tools: GGML and GPTQ democratize advanced AI access.

- Research and Benchmarks: Ongoing evaluations help developers pick suitable models.

Privacy and Data Considerations

Using Llama locally keeps data under user control—a stark contrast to API-based models. Self-hosted Llama avoids data transmission over the internet, respecting privacy and regulatory requirements inherent in sectors like healthcare and finance.

While self-hosting presents security challenges, it remains a viable alternative for those prioritizing data privacy. Fine-tuning requires careful data management, ensuring proprietary data doesn’t inadvertently leak.

A hybrid approach—initial API model prototyping transitioning to self-hosted Llama—balances speed, privacy, and long-term economy.

Getting Started with Llama

Begin your Llama journey by considering your technical prowess and goals.

- For Early Experimentation: Use hosted services like Hugging Face Spaces.

- For Developers: Employ Python libraries like Transformers and LlamaIndex.

- For Larger Models: Utilize cloud GPUs from services like RunPod or Vast.ai.

- For Fine-Tuning: Consider services like Predibase or Monster API.

For production, incorporate monitoring, caching, and fallback strategies, ensuring robust model deployment.

Frequently Asked Questions

What are the key differences between Llama models and proprietary options like GPT-4?

The primary difference is accessibility; Llama models are open-source and can be downloaded and modified freely, while proprietary models like GPT-4 are accessible only through paid APIs. This enables users to maintain control over their data and infrastructure without the need for third-party services.

How can businesses integrate Llama models into their workflows?

Businesses can use Llama models for various applications, including customer support via chatbots, content generation for marketing, code assistance in software development, and document analysis for data insights. Each implementation can be tailored to align with specific business objectives and enhance operational efficiency.

What are the licensing terms for using Llama models?

While Llama models are labeled as open-source, they operate under a custom license that allows free commercial use for most users. However, organizations exceeding 700 million active users must obtain a special license, making it essential for large-scale businesses to understand these conditions before deployment.

Can I customize Llama models for my specific data needs?

Yes, users can modify and fine-tune Llama models on their datasets to better suit their specific applications. Customization can include training on proprietary data, adjusting safety parameters, and merging models, but outputs cannot be utilized to train new language models.

What technical requirements are needed to run Llama models locally?

Running Llama models locally typically requires access to compatible GPUs and the necessary computing infrastructure, which can be obtained through cloud services. Additionally, users should have some familiarity with programming, particularly in Python, to effectively use model libraries like Transformers and LlamaIndex.

How does using Llama impact data privacy?

Using Llama models locally enhances data privacy since sensitive information is not transmitted over the internet. This is especially critical in regulated industries such as healthcare and finance, where data control and compliance with privacy regulations are paramount.

Is it necessary to self-host Llama for maximum performance?

Self-hosting can provide benefits such as lower latency, full control over data, and customization flexibility, making it essential for organizations with specific performance or data privacy needs. However, for initial prototyping and smaller-scale uses, managed hosting via services like Hugging Face may be a more convenient option.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.