Mistral AI: European Open-Weight Models Leading Innovation

Discover Mistral AI's open-weight models like Mistral 7B and Mixtral 8x7B. Learn about European AI leadership and what makes these models efficient.

What is Mistral AI and Why It Matters

Mistral AI is a pioneering French AI startup, founded in Paris in 2023, that builds open-weight large language models with a focus on efficiency and performance. It quickly became one of the most prominent names in European AI. Open-weight models are AI systems whose trained weights are publicly released, so developers and researchers can download, run, and adapt them. Mistral AI’s approach prioritizes efficiency: its models compete with larger systems while using fewer computational resources, which lowers running costs for businesses that rely on AI. Within its first year, the company reached a valuation of around 2 billion euros, and later funding pushed it higher; in 2025, ASML invested €1.3 billion and became Mistral AI’s largest shareholder. Its first releases, Mistral 7B and Mixtral 8x7B, are designed for developers who want capable AI without extensive infrastructure costs. According to Mistral AI’s own evaluations, Mistral 7B outperforms Llama 2 13B on all benchmarks tested.

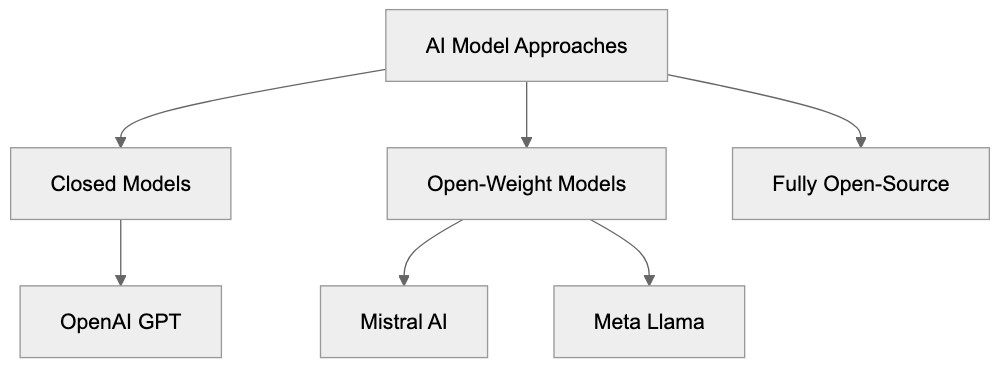

Understanding Open-Weight AI Models

Mistral AI’s Position in the AI Landscape:

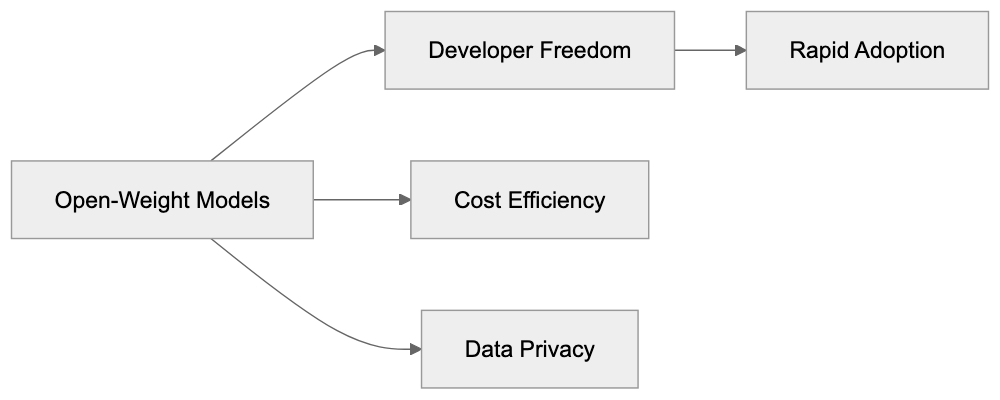

Open-weight models, including those from Mistral AI, offer a middle ground between fully open-source AI and closed systems. Open-weight releases share the trained parameters but may not disclose the training code or datasets. Even so, they give developers substantial freedom, such as running models on their own hardware, which keeps data private and under their control. This is particularly appealing for security-conscious businesses. Training a model like Mistral 7B is expensive, but releasing the weights lets others skip that expense. This fosters rapid adoption as developers integrate the models into products, creating a strong ecosystem. Mistral AI and others, such as Meta with its Llama models, drive this trend, whereas OpenAI has kept a closed approach with models like GPT-4.

Mistral 7B: Efficiency Meets Performance

Released in September 2023, Mistral 7B marked Mistral AI’s entry into the AI model space. With about 7 billion parameters, it outperforms Llama 2 13B, a model nearly twice its size, on Mistral AI’s reported benchmarks. It uses grouped query attention, in which groups of query heads share a single key/value head; this shrinks the memory needed to cache keys and values during generation and speeds up inference, which translates into lower operating costs. Mistral 7B supports a context length of 8192 tokens, useful for tasks like coding and reasoning. It performs best in English, and developers can fine-tune it for specific needs to create tailored models.
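To make the memory saving from grouped query attention concrete, the short calculation below estimates the size of the key/value cache for one 8192-token sequence. It assumes the layer and head counts published for Mistral 7B (32 layers, 8 key/value heads shared by 32 query heads, head dimension 128) and 16-bit values; the multi-head baseline with 32 key/value heads is a hypothetical comparison, not a real model.

```python
# Rough KV-cache size estimate for a single sequence.
# Defaults assume the published Mistral 7B shape: 32 layers, 8 key/value heads,
# head dimension 128, and 2 bytes per value (fp16/bf16).


def kv_cache_bytes(seq_len: int, n_layers: int = 32, n_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor 2: every layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


GIB = 1024 ** 3
gqa = kv_cache_bytes(8192)                  # grouped query attention: 8 KV heads
mha = kv_cache_bytes(8192, n_kv_heads=32)   # hypothetical baseline: one KV head per query head
print(f"GQA: {gqa / GIB:.1f} GiB, MHA: {mha / GIB:.1f} GiB")
```

Under these assumptions, sharing each key/value head across four query heads cuts the cache by a factor of four (about 1 GiB instead of 4 GiB), memory that can go to longer inputs or larger batches instead.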

Open-Weight Model Benefits:

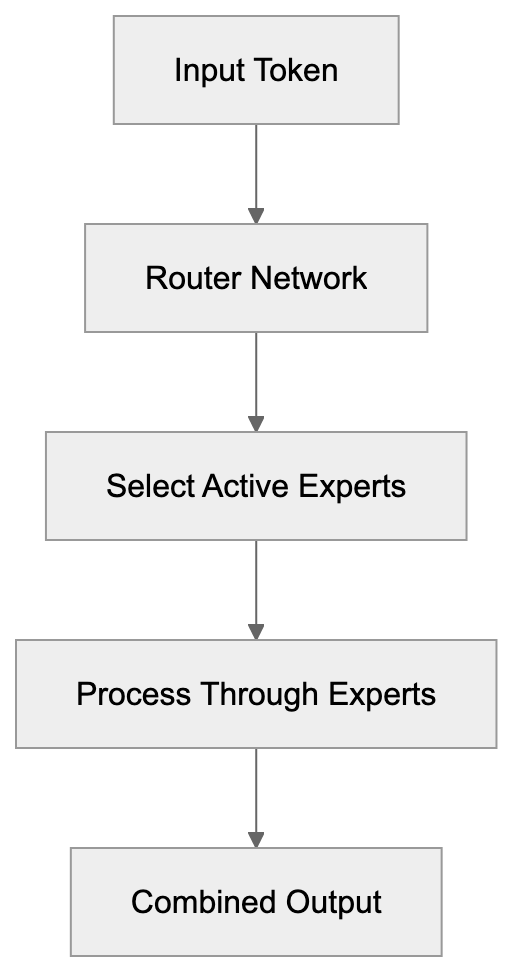

Mixtral 8x7B: The Mixture of Experts Approach

Mixtral 8x7B, launched in December 2023, showcases Mistral AI’s sparse mixture of experts architecture. In each transformer layer, the feed-forward block is replaced by eight expert networks, and a router selects two of them to process each token. Because the attention layers and embeddings are shared, the model has about 47 billion parameters in total rather than 8 × 7 = 56 billion, and only about 13 billion are active for any given token, so its per-token compute is closer to that of a 13B dense model. Mistral AI reports that it outperforms Llama 2 70B on most benchmarks, and it supports a 32k-token context window. Mixtral handles English, French, Italian, German, and Spanish and is particularly strong at code generation.
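The “8x7B” name can suggest 56 billion parameters, so a rough count helps explain the 47B total and 13B active figures. The sketch below assumes the dimensions published in the Mistral 7B and Mixtral configurations (hidden size 4096, 32 layers, 32 query heads and 8 key/value heads of size 128, a SwiGLU feed-forward size of 14336, a 32,000-token vocabulary) and assumes that only the feed-forward block is replicated into experts while attention, norms, and embeddings are shared. It counts only weight matrices and norm vectors.

```python
# Back-of-the-envelope parameter count for a Mixtral-style sparse MoE decoder.
# All dimensions are assumptions taken from the published model configs.
DIM = 4096         # hidden size
N_LAYERS = 32
N_HEADS = 32       # query heads
N_KV_HEADS = 8     # key/value heads (grouped query attention)
HEAD_DIM = 128
FFN_DIM = 14336    # SwiGLU hidden size of each expert
VOCAB = 32000


def param_count(n_experts: int, experts_per_token: int) -> tuple[int, int]:
    """Return (total, active-per-token) parameter counts."""
    # wq and wo map DIM <-> N_HEADS*HEAD_DIM; wk and wv map DIM -> N_KV_HEADS*HEAD_DIM.
    attn = 2 * DIM * N_HEADS * HEAD_DIM + 2 * DIM * N_KV_HEADS * HEAD_DIM
    expert = 3 * DIM * FFN_DIM                    # SwiGLU uses three weight matrices
    router = DIM * n_experts if n_experts > 1 else 0
    norms = 2 * DIM                               # two RMSNorm weight vectors per layer
    shared = 2 * VOCAB * DIM + DIM                # input embedding, output head, final norm
    total = N_LAYERS * (attn + n_experts * expert + router + norms) + shared
    active = N_LAYERS * (attn + experts_per_token * expert + router + norms) + shared
    return total, active


dense_total, _ = param_count(n_experts=1, experts_per_token=1)
moe_total, moe_active = param_count(n_experts=8, experts_per_token=2)
print(f"Mistral 7B:   {dense_total / 1e9:.2f}B")
print(f"Mixtral 8x7B: {moe_total / 1e9:.1f}B total, {moe_active / 1e9:.1f}B active")
```

With these assumptions the count comes to about 7.24B for the dense model and 46.7B total, 12.9B active for Mixtral, in line with the published figures. The gap between 56B and 47B is the shared attention and embedding weights; the active count is two experts per layer plus everything shared.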

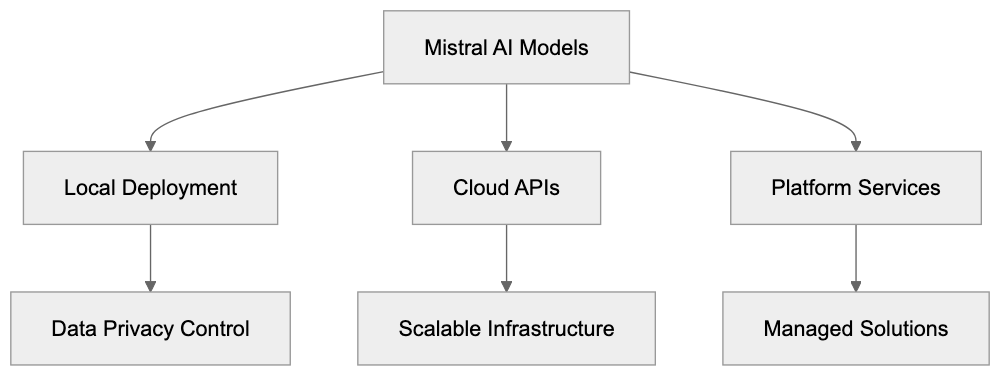

How Businesses and Developers Use Mistral AI

Mixture of Experts Architecture:

Mistral AI models, such as Mistral 7B and Mixtral 8x7B, can be integrated into applications in several ways. Developers can run the models locally to keep data private, or use cloud-hosted APIs. Startups use them to add AI features without training models in-house. Applications range from customer-service chatbots to automated tutoring in education. Content marketers generate text drafts with these models, and legal firms use them for contract analysis. Because the models are efficient, they can run on less powerful hardware, making AI accessible to small businesses.
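For the cloud-hosted route, a request to Mistral AI’s chat API might look like the sketch below, which uses only the Python standard library. The endpoint URL, the `open-mistral-7b` model identifier, the `MISTRAL_API_KEY` environment variable name, and the response layout are assumptions based on the common chat-completions format; check Mistral AI’s current API documentation before relying on them, as model identifiers change over time.

```python
import json
import os
import urllib.request

# Assumed endpoint and model identifier; verify against the current API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "open-mistral-7b"


def build_chat_request(user_message: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(user_message: str) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(user_message, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    # Assumes the response follows the common chat-completions layout.
    return payload["choices"][0]["message"]["content"]
```

Separating `build_chat_request` from `ask` keeps request construction testable without network access. A customer-service chatbot would call `ask` once per user turn, and a fuller version would append earlier turns to `messages` to preserve the conversation context.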

Funding and Growth Trajectory

In June 2023, Mistral AI raised about 105 million euros in a seed round, a record for a European AI startup, at a valuation of about 240 million euros. In December 2023, it raised a further 385 million euros, lifting its valuation to around 2 billion euros, with investors including Andreessen Horowitz and Lightspeed Venture Partners. Its swift growth exemplifies European AI momentum, and the company competes directly with American AI labs. Its ambition is to build sovereign AI capabilities for Europe, offering an alternative to American and Asian providers with an emphasis on data sovereignty and regulatory compliance.

Comparing Mistral AI to Alternative Models

A comparison of Mistral AI models against alternatives highlights their unique strengths:

| Model | Parameters | Context Length | Training Organization | Release Date | Key Strength |
|---|---|---|---|---|---|
| Mistral 7B | 7B | 8192 tokens | Mistral AI | Sept 2023 | Efficiency and speed |
| Mixtral 8x7B | 47B (13B active) | 32k tokens | Mistral AI | Dec 2023 | Sparse expert architecture |
| Llama 2 7B | 7B | 4096 tokens | Meta | July 2023 | Wide adoption and ecosystem |
| Llama 2 70B | 70B | 4096 tokens | Meta | July 2023 | Strong general performance |
| Falcon 40B | 40B | 2048 tokens | TII | May 2023 | Trained on quality web data |
| MPT 7B | 7B | 2048 tokens | MosaicML | May 2023 | Commercial-friendly license |


Mistral models achieve strong performance with fewer resources. Llama 2 benefits from broader adoption and a more extensive ecosystem, while Falcon emphasizes high-quality training data. MPT was an early option with a commercial-friendly license, but Mistral’s models offer similarly permissive licensing with competitive performance.
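Sparse activation reduces the compute needed per token, but it does not reduce the memory needed to store the weights: every Mixtral expert must be loaded even though only about 13 billion parameters are used for each token. The rough estimate below, using the nominal parameter counts from the table above, shows weights-only memory at 16-bit and 4-bit precision. It deliberately ignores the KV cache, activations, and quantization overhead, so real requirements are higher.

```python
# Weights-only memory estimate (no KV cache, activations, or runtime overhead).
# Parameter counts are the nominal figures from the comparison table.
MODELS = {
    "Mistral 7B": 7e9,
    "Mixtral 8x7B": 47e9,  # all experts must be resident, not just the ~13B active
    "Llama 2 70B": 70e9,
    "Falcon 40B": 40e9,
}


def weight_gb(n_params: float, bits_per_param: int) -> float:
    """Footprint of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


for name, n in MODELS.items():
    print(f"{name:13s} fp16: {weight_gb(n, 16):6.1f} GB   4-bit: {weight_gb(n, 4):5.1f} GB")
```

By this estimate Mistral 7B needs about 14 GB at 16-bit precision (about 3.5 GB at 4-bit), while Mixtral 8x7B needs about 94 GB (about 23.5 GB at 4-bit), which is why quantized builds are common for local deployment.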

Technical Architecture and Innovations

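Mistral AI models are built on the transformer architecture, with optimizations such as grouped query attention to reduce memory usage. In Mistral 7B, sliding window attention limits each layer to attending over a fixed window of recent tokens (4096), which keeps attention cost and cache size bounded; because layers are stacked, information can still propagate beyond a single window, which helps with long inputs. In Mixtral’s mixture of experts design, a router network in each layer sends each token to two of the eight experts, so only a fraction of the parameters is used per token, improving inference efficiency.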
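Both mechanisms can be sketched in a few lines of plain Python. `sliding_window_mask` builds a causal mask in which each position sees at most `window` recent positions (the exact boundary convention is an assumption), and `top2_route` follows the gating described for Mixtral: score every expert with a linear router, keep the top two, and apply a softmax over just those two scores. The toy router weights and the scaling “experts” are hypothetical stand-ins for the real learned feed-forward networks.

```python
import math
import random


def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Attention is causal (j <= i) and limited to the `window` most recent
    positions, including position i itself (i - j < window).
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def top2_route(x: list[float], router_weights: list[list[float]]) -> list[tuple[int, float]]:
    """Select two experts for one token and return (expert_index, gate) pairs.

    router_weights holds one row of len(x) weights per expert.
    """
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    top = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:2]
    # Softmax over the two selected logits only, so the gates sum to 1.
    m = max(logits[e] for e in top)
    exps = [math.exp(logits[e] - m) for e in top]
    total = sum(exps)
    return [(e, w / total) for e, w in zip(top, exps)]


def moe_layer(x: list[float], router_weights: list[list[float]], experts) -> list[float]:
    """Gate-weighted sum of the outputs of the two selected experts."""
    out = [0.0] * len(x)
    for e, gate in top2_route(x, router_weights):
        y = experts[e](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy setup: 8 experts over a 4-dimensional hidden state.
random.seed(0)
DIM, N_EXPERTS = 4, 8
router = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(N_EXPERTS)]
# Toy "expert" k just scales its input by k + 1 (real experts are feed-forward networks).
experts = [lambda v, k=k: [(k + 1) * vi for vi in v] for k in range(N_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
print(top2_route(token, router))
print(moe_layer(token, router, experts))
```

In a real model these operations run on batched tensors on a GPU; the list-based version only illustrates the logic. For example, `sliding_window_mask(5, 3)[4]` allows position 4 to attend to positions 2, 3, and 4 only.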

Licensing and Usage Terms

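Mistral 7B and Mixtral 8x7B are released under the permissive Apache 2.0 license, which allows commercial use and modification without royalties. Meta’s Llama 2 license, by contrast, includes an acceptable use policy and requires companies whose products exceed 700 million monthly active users to request a separate license from Meta; Apache 2.0 adds no such usage restrictions, which encourages broad adoption. Because the weights can be self-hosted, this framework avoids vendor lock-in and gives users control over their data and infrastructure.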

Performance Benchmarks and Capabilities

Mistral AI reports competitive results for Mistral 7B on benchmarks such as MMLU (broad knowledge and reasoning) and HumanEval (Python code generation). For Mixtral 8x7B, it reports stronger multilingual results than Llama 2 70B on French, German, Spanish, and Italian benchmarks, fitting the company’s French origins. These results suggest the models suit a wide range of applications.

Integration Options and Ecosystem

Developers can access Mistral AI models through platforms like Hugging Face, using the transformers library for integration. Major cloud platforms also offer managed Mistral models, and the company’s own service, La Plateforme, provides commercial API access. For privacy-sensitive cases, the models can be deployed locally, and tools like Ollama make it easier to run them on modest hardware.
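For local use, Ollama exposes an HTTP API on the machine where it runs. The sketch below assumes Ollama is listening on its default port (11434), that a Mistral model has already been pulled under the name `mistral`, and that the `/api/generate` endpoint returns a single JSON object with a `response` field when streaming is disabled; verify these against the Ollama documentation for your version.

```python
import json
import urllib.request

# Assumes a local Ollama server on its default port with the model already pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming generation request for a local Ollama server."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=300) as resp:
        # With "stream": False the server replies with one JSON object whose
        # "response" field holds the full completion.
        return json.load(resp)["response"]
```

Calling `generate("Summarise the Apache 2.0 license in one sentence.")` sends the prompt only to the local server, so no data leaves the machine, which is the main appeal for privacy-sensitive deployments.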

European AI Leadership and Strategy

As a leader in European AI, Mistral AI aims to compete globally, drawing on Europe’s deep pool of AI research talent. The company offers an attractive option for European researchers, and its European base aligns with regulatory frameworks such as the GDPR, which can make transparency and compliance simpler for businesses.

Future Developments and Model Roadmap

Mistral AI plans ongoing model development, balancing open-weight and closed model releases. Future enhancements might include larger expert models, extended context lengths, and the addition of multimodal capabilities incorporating vision processing. Improvements in quantization and fine-tuning support for specific use cases will likely enhance model efficiency and adoption.

Summary

Mistral AI is a European company building efficient open-weight language models. Mistral 7B and Mixtral 8x7B deliver competitive performance with lower resource requirements and are released under the Apache 2.0 license, which allows commercial use without royalties. The models can run locally for data privacy or be accessed through cloud APIs, making them accessible to startups and enterprises alike.

Frequently Asked Questions

What are the main benefits of using Mistral AI's models?

Mistral AI's models provide strong performance at a lower cost than larger models. Because their weights are openly released, developers can download and run them locally, keeping data private and under their control, which is vital for many businesses.

How does Mistral AI compare to other AI models?

Mistral AI models deliver strong performance relative to their size. Mistral 7B outperforms Llama 2 13B, and Mixtral 8x7B outperforms Llama 2 70B on most benchmarks while using only about 13 billion active parameters per token, thanks to its mixture of experts architecture.

What is the significance of the open-weight model approach?

Open-weight models encourage widespread adoption by letting developers freely use the published model weights without bearing the cost of training a model from scratch. This fosters a collaborative ecosystem where innovation can flourish while keeping costs manageable.

Can I fine-tune Mistral AI models for specific applications?

Yes, developers can fine-tune Mistral AI models like Mistral 7B for specific tasks to enhance their relevance and performance. The flexibility in deployment allows for custom adaptations depending on the user’s needs.

What licensing terms apply to Mistral AI models?

Mistral 7B and Mixtral 8x7B are released under the permissive Apache 2.0 license, which allows commercial use and modification without royalties. This licensing is designed to promote wide adoption and avoid vendor lock-in.

How can businesses integrate Mistral AI models into their operations?

Businesses can integrate Mistral AI models through cloud-hosted APIs or by deploying them locally for improved data privacy. Startups and other organizations use these models for a range of purposes, including chatbots, content creation, and data analysis.

What future developments can we expect from Mistral AI?

Mistral AI plans to enhance its models by possibly releasing larger expert models, increasing context lengths, and adding multimodal capabilities. Ongoing improvements in quantization and fine-tuning support are anticipated to broaden the models' applicability and efficiency.
