Microsoft Phi Small Language Models: Complete Guide

Learn about Microsoft's Phi small language models, their efficiency, Phi-3 variants, and how they enable on-device AI with MIT licensing.

What Are Small Language Models and Why Microsoft Built Phi

Small language models like Microsoft Phi are a novel approach to AI, differing from massive models such as GPT-4 or Claude. While large models boast billions or trillions of parameters, small language models like Phi-3 focus on task effectiveness. Microsoft Phi-3 showcases how these models deliver strong performance across various tasks without massive computing demands.

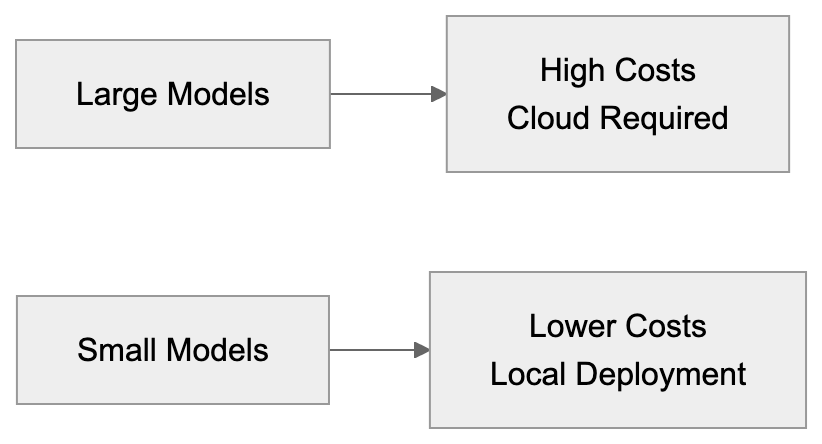

Microsoft developed Phi to address real-world challenges, including the high costs and energy consumption associated with large AI models. Large AI models require costly hardware and high energy consumption, which not every business or developer can justify. Many applications function well with smaller, more efficient AI models operable on standard computers or mobile devices. Phi models are available under an open-source MIT license, allowing developers to freely incorporate them into commercial projects without restrictions. This makes Phi appealing to small business owners and web developers aiming to integrate AI without hefty infrastructure expenses.

Understanding the Phi Model Family

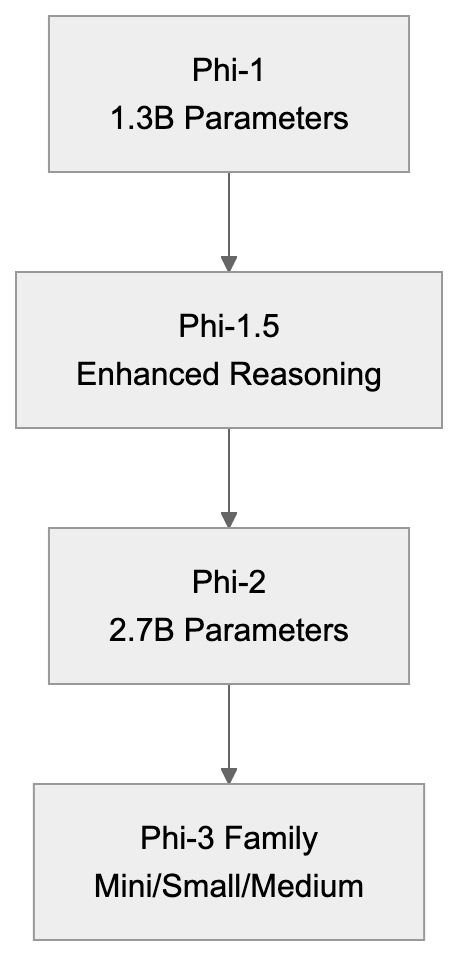

Microsoft released the Phi series in phases. The original Phi-1, launched in 2023 with 1.3 billion parameters, focused on code generation and basic reasoning tasks. Phi-1.5 enhanced common sense reasoning and language understanding. Phi-2 followed with 2.7 billion parameters, demonstrating the significance of data selection over sheer model size.

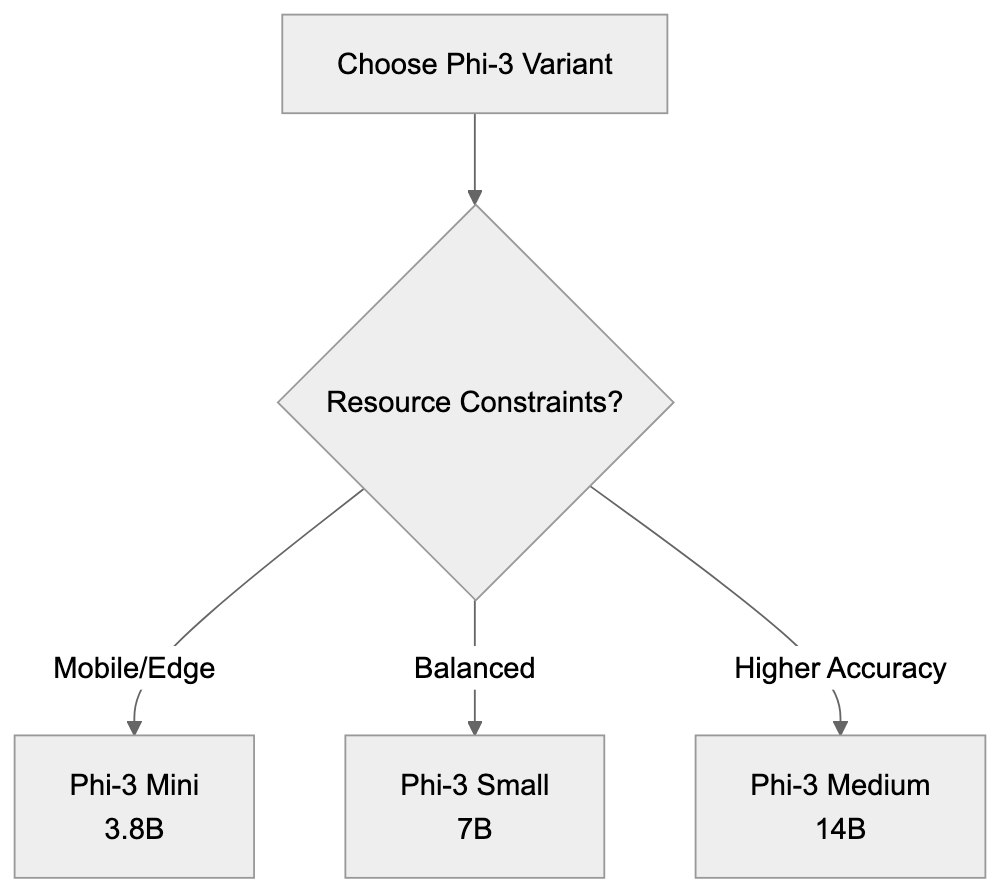

The Phi-3 family represents the latest generation, with three variants addressing different needs, including Phi-3 Mini, Phi-3 Small, and Phi-3 Medium. Phi-3 Mini, featuring 3.8 billion parameters, is designed for smartphones. Phi-3 Small, with 7 billion parameters, balances performance with effectiveness, while Phi-3 Medium, at 14 billion parameters, handles more demanding tasks. All versions embody the efficient AI models approach central to Phi.

Phi Model Family Evolution:

Phi models stand out due to their training methodology. Microsoft employed high-quality textbook-style data, reducing the need for vast, noisy datasets, leading to high benchmark performance relative to size. This ‘textbook quality training’ means the models learn from carefully curated examples, leading to high benchmark performance relative to size.

Why Small Language Models Matter for Businesses

Cost greatly influences business decisions. Running queries on large AI model APIs can be expensive, especially with thousands of daily requests. With small language models like Phi-3, businesses run AI locally, paying only for initial computing resources instead of ongoing per-query charges.

Latency is also crucial. On-device AI ensures rapid responses, as Phi models process data locally, contrasting with the slower, remote server requests. This speed advantage benefits customer-facing applications where immediacy matters, such as chatbots and interactive features.

Small vs Large Language Models Comparison:

Privacy is another consideration. On-device AI with Phi models allows sensitive data to remain within a company’s infrastructure, mitigating compliance issues with regulations like GDPR. The MIT licensing of Phi removes legal worries, granting businesses the freedom to use them without concerns over licensing fees or restrictions.

Technical Capabilities and Performance

Phi-3 Mini offers impressive performance for its size, rivaling models ten times larger in tasks like question answering, summarization, and code generation. It runs on devices with as little as 4GB of memory, making it suitable for edge computing.

Phi-3 Small strikes a balance between effectiveness and capability. With 7 billion parameters, it excels in complex reasoning tasks while requiring modest hardware. It’s ideal for developers needing accuracy without deploying large models.

Phi-3 Medium, the most capable in the family, rivals older large language models. Though requiring more resources, it remains efficient compared to larger models. SEO experts and content marketers use Medium for content generation and analysis.

All Phi-3 models support multiple languages, with the best performance in English. They also work with various input types, including text and basic image understanding in multimodal versions.

Comparison with Alternative Small Language Models

Here’s how Phi-3 compares to other small language models:

| Model | Parameters | License | Key Strength | Typical Use Case |

|---|---|---|---|---|

| Phi-3 Mini | 3.8B | MIT | Mobile deployment | On-device chatbots |

| Phi-3 Small | 7B | MIT | Balanced performance | Local coding assistants |

| Phi-3 Medium | 14B | MIT | Higher accuracy | Document processing |

| Mistral 7B | 7B | Apache 2.0 | Speed | API alternatives |

| Gemma 7B | 7B | Custom | Google combining | Cloud deployments |

| Llama 3 8B | 8B | Custom | Meta ecosystem | Research projects |

| Qwen 7B | 7B | Custom | Multilingual | International apps |

Mistral 7B and Phi-3 Small inhabit the same size category. While Mistral often excels in raw performance, Phi-3’s MIT license offers simplicity compared to Mistral’s Apache 2.0.

Phi-3 Model Selection by Use Case:

Google’s Gemma models suit Google Cloud service users but have restrictive licenses. Phi-3’s MIT license provides more flexibility. Meta’s Llama 3 8B, popular for research, requires approval for large-scale commercial use, a constraint absent in Phi-3. Qwen, from Alibaba, excels in multilingual tasks, making it ideal for Asian languages but less competitive for English-focused applications.

Real World Applications and Use Cases

Software developers embed Phi-3 models into desktop applications for offline AI features. Code editors use them for autocompletion and bug detection. The models run fast enough for real-time suggestions, independent of external APIs.

Small business owners deploy Phi-3 for customer service chatbots. Local servers reduce costs compared to per-message API fees, while keeping customer interactions private.

Web developers integrate Phi-3 into content management systems for automated metadata generation. The model suggests tags, descriptions, and categories, saving content teams time while maintaining quality.

Marketing professionals use Phi-3 Medium for content ideation and draft generation, keeping campaign plans secure within the network.

SEO experts utilize Phi models to analyze competitor content and identify keyword opportunities, processing web pages locally for quick insights.

Content marketers leverage Phi-3 for transforming content across formats, ensuring consistent messaging while adapting tone appropriately.

Getting Started with Microsoft Phi Models

Phi models are hosted on Hugging Face for easy access. Developers can download the model weights and utilize them with frameworks like PyTorch and ONNX Runtime. Documentation and example code aid in common tasks.

Hardware requirements vary. Phi-3 Mini runs on recent smartphones and basic laptops with 4-6GB RAM. Phi-3 Small requires 8-12GB RAM, and Phi-3 Medium needs 16-24GB, depending on quantization.

Quantization reduces model size and memory needs, converting weights from 16-bit to lower precision, speeding up inference while maintaining accuracy.

Developers can experiment with demo apps demonstrating chat interfaces, code completion, and document summarization, learning to customize Phi for specific needs.

Production deployment demands planning, including updates, performance monitoring, and fallback strategies for difficult queries. Testing with real user data is crucial before launch.

Limitations and Considerations

Small language models, including Phi-3, have limitations compared to larger counterparts. They struggle in specialized domains, lacking expert-level understanding in fields like medicine or law.

Context window size limits processing. Phi-3 can handle several thousand tokens but not entire books, necessitating chunking strategies for long documents.

Models may confidently generate incorrect information. Verify outputs for accuracy, especially in high-stakes situations.

Multilingual performance varies, with English faring best. Applications may require specialized models for non-English languages.

Fine-tuning enhances performance but requires data and expertise, presenting challenges for small businesses without technical teams.

Future Development and Updates

Microsoft continually improves the Phi series, releasing updates and adding capabilities. Following the official Microsoft AI blog and GitHub repositories keeps developers updated on new innovations.

The trend toward smaller models is growing. Expect increased competition in this space, with newer releases improving tradeoffs.

Multimodal capabilities in Phi are expanding, potentially adding audio and video understanding, facilitating richer multimedia applications.

Quantization techniques are advancing, making AI models even more effective, possibly enabling use on older devices or IoT hardware.

Community contributions enhance Phi’s ecosystem, sharing specialized versions for specific industries or tasks, driving collaborative development.

Conclusion

Microsoft Phi represents a notable shift in AI toward effectiveness and accessibility. The Phi-3 family proves that small language models provide practical value without substantial infrastructure needs. Phi-3 Mini, Small, and Medium offer performance and resource tradeoffs for varying business needs.

With MIT licensing, Phi appeals to businesses of all sizes, providing cost savings, privacy, and low latency compared to API solutions, as it can be deployed locally on devices. Developers, small business owners, and marketers find diverse applications for these efficient models.

Though Phi models have limits compared to larger systems, they excel at many common tasks. Understanding these factors helps choose the right tool for each situation. A growing ecosystem and continuous development promise enhanced capabilities in future releases. For those seeking AI integration without significant costs or complexity, Microsoft Phi remains a compelling option.

Frequently Asked Questions

What types of tasks can Phi models perform?

Phi models excel in various tasks such as question answering, summarization, code generation, and basic reasoning. Their capabilities make them ideal for applications like chatbots, desktop applications, and content generation.

How do I access and use Microsoft Phi models?

Phi models can be accessed on Hugging Face, where developers can download the model weights. They are compatible with frameworks like PyTorch and ONNX Runtime, and documentation with example code is available to assist in getting started.

Are there specific hardware requirements for running Phi models?

Yes, hardware requirements vary by model. Phi-3 Mini can run on devices with 4-6GB RAM, Phi-3 Small requires 8-12GB RAM, and Phi-3 Medium needs 16-24GB, depending on desired performance and quantization options.

What is the advantage of using small language models like Phi?

Small language models like Phi provide significant cost savings and privacy advantages, as they can run locally without ongoing API costs. They also offer faster response times due to on-device processing, making them suitable for real-time applications.

Can Phi models be fine-tuned for specific applications?

Yes, Phi models can be fine-tuned to enhance performance for specific tasks. However, this process requires access to relevant data and some technical expertise, which might pose challenges for smaller organizations.

What are the limitations of Phi models compared to larger models?

Phi models may struggle with highly specialized knowledge and have limits on context window size, meaning they cannot process extremely long documents in one go. Additionally, while they perform well, they may generate incorrect information that requires verification.

How is Microsoft planning to further develop the Phi series?

Microsoft is focused on ongoing improvements to the Phi series, including expanding multimodal capabilities and enhancing quantization techniques. These advancements aim to boost performance and accessibility on a variety of devices, potentially enhancing usability for different industries.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.