Qwen: Alibaba's Open-Source AI Models Guide

Discover Alibaba's Qwen open-source models, covering Qwen 2.5, multilingual support, and Apache 2.0 licensing in this comprehensive guide.

Introduction

Qwen is Alibaba Cloud’s family of open source AI models. The name stands for Tongyi Qianwen, which is Alibaba’s AI assistant platform, launched in April 2023. These Qwen models, including Qwen 2.5, are designed for developers and researchers needing robust language processing capabilities without vendor lock-in. Supporting over 29 languages, Qwen models are available under the Apache 2.0 license, allowing unrestricted commercial use. The latest release, Qwen 2.5, enhances performance across coding, mathematics, and multilingual tasks, as detailed in Alibaba Cloud’s announcement. Companies worldwide are adopting Qwen due to its competitiveness with leading models like Llama and Mistral AI, and its commitment to open source principles, as highlighted in TechCrunch’s coverage. The models vary in size from 0.5B to 72B parameters, offering compatibility with various hardware setups, with Qwen models driving AI accessibility and Alibaba’s global AI presence.

What is Qwen and How It Works

Qwen represents a suite of large language models developed by Alibaba Cloud’s research team. Comparable to models such as GPT, Llama, and Claude, Qwen models use transformer-based architecture, which is standard in most modern AI systems. However, Qwen differentiates itself with a focus on multilingual capabilities. It was trained using datasets in 29 languages, including English, Chinese, Spanish, French, German, Japanese, Korean, and Arabic. Qwen offers various versions: base models, instruction-tuned models for chat, and specialized versions for coding, known as Qwen-Coder. Released in September 2024, the Qwen 2.5 series includes models from 0.5 billion to 72 billion parameters, suitable for consumer hardware or server-grade GPUs. All models are released under the Apache 2.0 license, permitting modifications, distribution, and commercial use without licensing fees.

Why Qwen Exists and Its Purpose

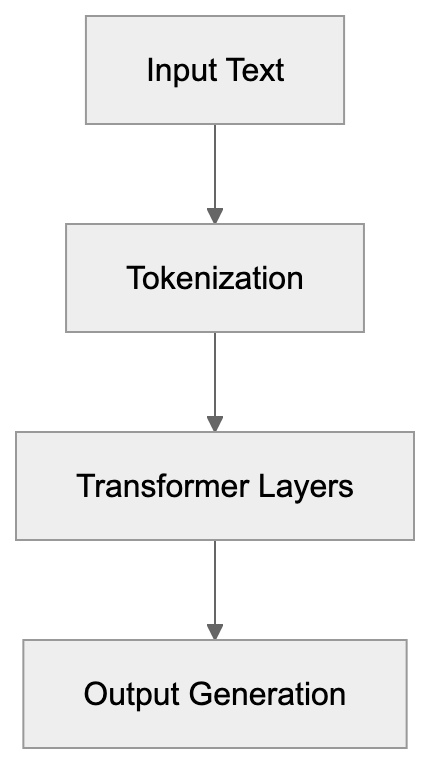

Qwen Model Architecture Overview:

Alibaba developed Qwen to solidify its position in the global open source AI ecosystem. Faced with restrictions on Western AI technologies, Chinese tech companies, including Alibaba, saw the need for homegrown alternatives. However, Qwen’s intent is global. Released globally, Qwen competes with Meta’s Llama and Mistral AI, aiming for worldwide adoption by developers. Open sourcing these models serves multiple purposes: building good relations and attracting talent to Alibaba’s AI ecosystem, garnering feedback and improvements from a global developer community, and establishing Alibaba Cloud as a significant AI infrastructure provider. Qwen models power Alibaba’s products such as the Tongyi Qianwen chatbot and their e-commerce tools. By offering strong multilingual support, Qwen targets markets where English-only models fall short. The Apache 2.0 license eliminates legal barriers that can hinder the adoption of some commercial AI models.

How Businesses and Developers Use Qwen

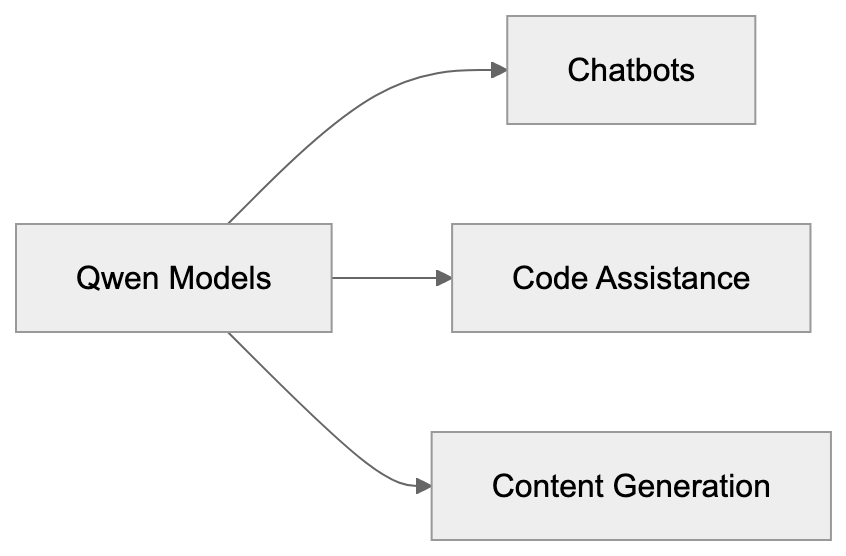

Developers leverage Qwen models for applications like chatbots, content generation, code assistance, and data analysis. These models integrate with popular frameworks like Hugging Face Transformers, vLLM, and Ollama. Smaller Qwen models can run on local machines, while larger versions are deployable on cloud infrastructure. Qwen’s strong performance in languages beyond English makes it particularly appealing to companies in Asia, Southeast Asia, and the Middle East. E-commerce platforms utilize it for automating product descriptions and customer service. Meanwhile, software development teams use Qwen-Coder for code completion and debugging. The models can be fine-tuned for domain-specific data, such as legal documents or healthcare records. Instruction-tuned versions of Qwen accurately follow prompts, making them ideal for tasks like data extraction and summarization. Researchers favor Qwen as a baseline for experiments, given its fully accessible weights, and some companies prefer it over closed models for auditing systems and ensuring data privacy by avoiding external API interactions.

Qwen 2.5 Performance and Benchmarks

Qwen Use Cases and Applications:

Qwen 2.5 demonstrates competitive performance against leading open source models. On the MMLU benchmark, which assesses general knowledge, the 72B model achieves a score of 86.8%, rivaling Llama 3.1 70B’s 87%. For coding, Qwen 2.5 Coder scores 65% on HumanEval compared to Llama 3.1 405B’s 89% and Mistral Large 2’s 92%. In mathematical reasoning, on the MATH benchmark, Qwen 2.5 72B scores 61.2%, while Llama 3.1 405B achieves 73.8%. These benchmarks underscore Qwen’s effective competitiveness. Significantly, Qwen excels in multilingual benchmarks like MGSM, surpassing Llama and Mistral due to Qwen’s multilingual training focus. The smaller models also perform well for their parameter size. Qwen 2.5 7B outperforms Llama 3.1 8B across most benchmarks despite having fewer parameters. Global adoption is increasing, with companies in Japan, Korea, UAE, Singapore, and Brazil reporting successful Qwen usage, especially in areas facing restrictions or high latency with Western cloud APIs.

Comparing Qwen to Alternative AI Models

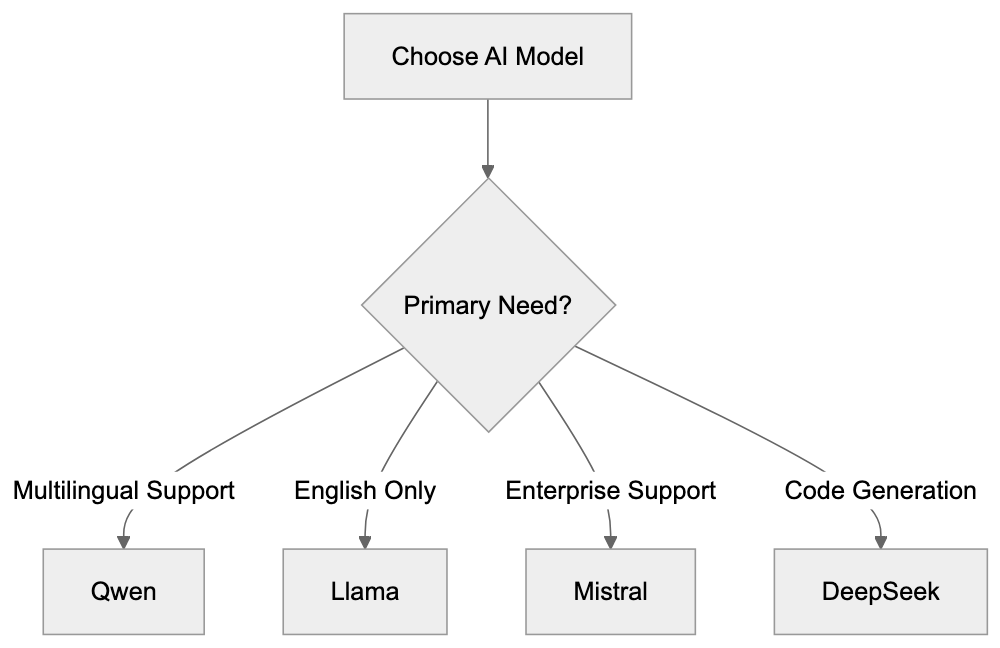

Here’s a comparison of Qwen against major open source alternatives:

| Model | Max Size | Languages | License | Coding | Math Score | Best For |

|---|---|---|---|---|---|---|

| Qwen 2.5 | 72B | 29 | Apache 2.0 | Strong | 58% MATH | Multilingual apps |

| Llama 3.1 | 70B | English-focused | Llama 3.1 405B | Excellent | 73.8% MATH | English applications |

| Mistral Large 2 | 123B | Multilingual | Apache 2.0 | Strong | 75.3% MATH | Enterprise use |

| DeepSeek-V2 | 236B | Multilingual | MIT | Excellent | 65.2% MATH | Code generation |

| Gemma 2 | 27B | Multilingual | Gemma License | Good | 64.9% MATH | Edge deployment |

Qwen is distinctive for its Apache 2.0 license, which is more permissive than Llama’s custom license that restricts usage above 700 million monthly active users. Qwen imposes no such limits. Mistral’s licenses also include commercial restrictions depending on the model version. While DeepSeek offers larger models, documentation and support are mainly in Chinese. Gemma from Google focuses on effectiveness over raw capability. For developers seeking strong multilingual support and full commercial freedom, Qwen offers an excellent balance. Llama remains a strong choice for purely English applications due to its larger community support and integrations. Mistral is ideal for enterprise deployments where support contracts are crucial. Each model holds unique strengths, but Qwen’s blend of performance, licensing, and multilingual capability makes it competitive globally.

Model Comparison Decision Flow:

Getting Started with Qwen Models

Accessing Qwen models is simplified through multiple platforms. Hugging Face hosts all versions, offering easy download and inference options, including benchmarks, usage examples, and fine-tuning guides. For local deployment, Ollama provides simple commands to run Qwen models on your machine. For instance, you can install Ollama and use the command “ollama run qwen2.5:7b” to initiate a session. For production deployments, vLLM and TGI (Text Generation Inference) offer enhanced serving capabilities. Cloud platforms like Alibaba Cloud, AWS, and Azure also list Qwen in their model catalogs. The official Qwen GitHub repository provides model weights, training code, and evaluation scripts. Smaller models like Qwen 2.5 7B efficiently run on consumer GPUs with 16GB VRAM, while the 14B and 32B versions require 24GB to 48GB VRAM. The largest 72B model needs multiple GPUs or high-end server hardware. The community is growing via Discord servers and GitHub discussions. Documentation includes API usage, prompt engineering tips, and fine-tuning workflows. Qwen models utilize standard tokenizers compatible with existing tools, ensuring easy integration into current workflows.

Licensing and Commercial Use

Qwen models operate under the Apache 2.0 license, granting extensive permissions, including commercial use without royalties. You are free to modify the models, redistribute changes, and integrate Qwen into proprietary products. The license mandates including copyright notices and indicating any modifications. Unlike some AI licenses, Apache 2.0 imposes no restrictions based on company size or usage scale, contrasting with Llama 3’s license, which requires Meta’s special approval for services exceeding 700 million monthly users. Certain Mistral model versions are restricted to non-commercial research. Apache 2.0 includes patent grants, legally protecting users from potential Alibaba patent infringement claims within license terms. For businesses, this licensing clarity is crucial, eliminating the need for custom agreements or concerns about usage caps. The primary obligation is straightforward attribution. This permissive licensing strategy positions Qwen as a strong competitor against closed models from OpenAI and Anthropic, where API costs and usage restrictions limit flexibility.

Future Development and Community

Alibaba is committed to the ongoing development of the Qwen family. New versions are released every few months, featuring performance enhancements. Qwen 3, expected in 2025, will feature larger context windows and improved reasoning abilities. The team is also working on specialized models for domains like medicine, law, and finance. As more developers adopt Qwen, community contributions are increasing, leading to new tools, fine-tuned versions, and applications. Qwen is being referenced increasingly in research papers as a baseline for comparisons. International adoption is growing, extending beyond Asia to Europe, the Middle East, and Latin America. Developers appreciate that Qwen maintains pace with advanced research while remaining open source. Competitive benchmark scores demonstrate that performance doesn’t have to be sacrificed for openness. Alibaba’s investment in Qwen signals a long-term commitment to the open source AI ecosystem. As Alibaba competes with Meta, Mistral, and others, continued improvements in Qwen are expected, offering developers a viable alternative to closed platforms with the freedom to customize and deploy as needed.

End

Qwen represents Alibaba’s significant foray into open source AI. It competes effectively with models like Llama and Mistral while offering superior multilingual capabilities. The Apache 2.0 license removes many commercial barriers faced by other models. Qwen models range from 0.5B to 72B parameters, suitable for various hardware configurations. Qwen 2.5 achieves strong results in coding, math, and language understanding benchmarks. International adoption is growing, especially in non-English markets where Qwen’s language support excels. Whether needed for chatbots, coding assistants, or content generators, Qwen provides a solid foundation. Its active development roadmap and expanding community indicate that Qwen will remain competitive as AI technology progresses. For developers and businesses seeking open source AI solutions without compromises, Qwen deserves serious consideration alongside more established options.

Frequently Asked Questions

What types of applications can benefit from using Qwen models?

Qwen models are versatile and can be used in various applications, including chatbots, content generation, code assistance, and data analysis. They are particularly effective in automating tasks like customer service interactions and product descriptions, making them valuable for e-commerce platforms.

How do I access and run Qwen models?

Qwen models can be accessed via platforms like Hugging Face, which offers easy download and inference options. For local deployment, tools like Ollama allow you to run models on your machine using simple commands. Developers can also leverage cloud platforms like Alibaba Cloud, AWS, and Azure for production use.

What hardware specifications are required to run different Qwen models?

The hardware requirements vary by model size. Smaller models like Qwen 2.5 7B can run on consumer GPUs with as little as 16GB of VRAM. Larger models, such as the 72B version, necessitate high-end server hardware or multiple GPUs, typically requiring 48GB or more of VRAM.

How does Qwen compare in performance to other open-source AI models?

Qwen 2.5 demonstrates competitive performance across various benchmarks, particularly excelling in multilingual capabilities. While it competes closely with models like Llama and Mistral, its strong focus on various languages and the absence of usage restrictions make it appealing for diverse applications.

What are the licensing conditions for using Qwen models?

Qwen models are under the Apache 2.0 license, allowing for extensive rights such as commercial use without royalties. Users can modify and redistribute the models, provided they include appropriate copyright notices. This licensing approach eliminates common limitations found in other AI models.

How does the Qwen community contribute to the model's development?

The Qwen community is actively growing, providing valuable feedback and contributions that lead to new tools, fine-tuned models, and applications. Increased collaboration through platforms like GitHub and Discord also enhances model capabilities, reflecting a commitment to open source development.

What future developments can we expect from Qwen?

Alibaba plans to release new versions of Qwen regularly, with updates focusing on enhanced performance and additional features. Future developments may include larger context windows and specialized models targeting specific domains such as finance and healthcare, ensuring Qwen remains competitive.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.