StableLM: Stability AI's Open Language Model Explained

Complete guide to StableLM covering model family, licensing, performance benchmarks, and how it compares to other open source language models.

Introduction

StableLM is an integral part of the open-source AI movement, developed by Stability AI. These open language models provide developers and researchers the freedom to access robust AI tools through open-source AI without being hampered by restrictive AI model licensing, as emphasized in Stability AI’s launch announcement. Designed to understand and generate human-like text, StableLM powers diverse applications, including chatbots, code assistants, and more, as detailed in TechCrunch’s coverage. The StableLM models vary in size from 3 billion to 65 billion parameters, trained on vast, varied datasets for versatility, as reported by Ars Technica. Stability AI offers these models under permissive licenses, benefiting businesses by allowing commercial use without incurring fees. The StableLM family encompasses base models and refined versions like Stable Beluga, specially fine-tuned for following instructions and engaging in conversations.

What is StableLM

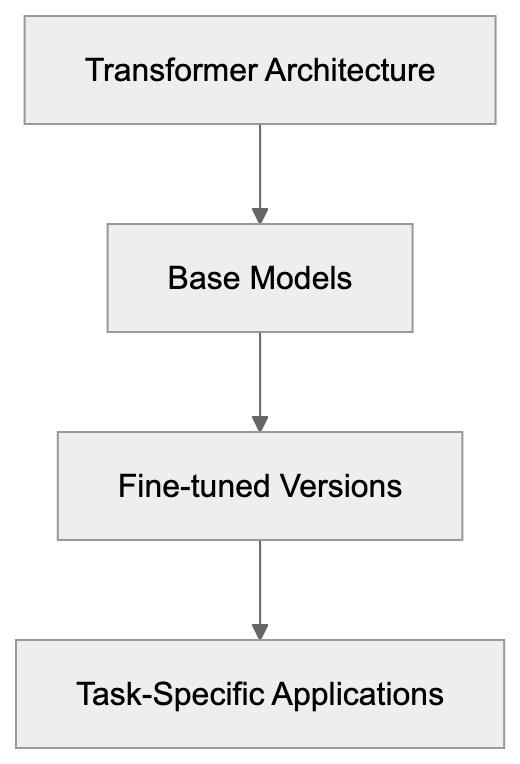

StableLM Model Architecture Overview:

StableLM, a suite of open-source large language models, was first introduced by Stability AI in April 2023. Utilized in constructing these models is the transformer architecture, the same technology behind leading modern language models like GPT. StableLM models vary in parameter sizes; smaller models like StableLM 3B have 3 billion parameters, while larger models boast up to 65 billion. Generally, more parameters indicate better performance, though this requires more computational resources. The base models undergo training on an open dataset known as The Pile and additional datasets totaling around 1.5 trillion tokens, enhancing their language comprehension and reasoning capabilities. Accessibility is a hallmark of Stability AI’s design, allowing smaller models to be operable on consumer hardware, while larger models demand more powerful systems yet remain more accessible than some proprietary alternatives. Open language models from StableLM are freely available with public weights and code.

Why StableLM Exists and Its Purpose

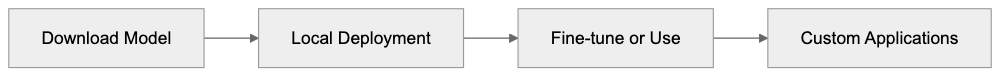

StableLM Development and Usage Flow:

Stability AI’s StableLM democratizes access to language AI, breaking free from the grip of big tech companies that control most powerful language models. The accessibility of open-source AI like StableLM lets developers download model weights and execute them locally. This level of openness grants autonomy without reliance on external services. StableLM’s purpose goes beyond accessibility, facilitating research into language AI to study the model’s workings, evaluate biases, and enhance safety protocols. Small businesses and startups leverage StableLM for building AI products affordably, without per-call API charges or service dependency. StableLM’s versatility allows fine-tuning on user-specific data, crafting bespoke AI tools tailored to distinct needs.

How StableLM is Used

StableLM supports a broad spectrum of applications, from chatbots and content generation to code assistance. Developers rely on these open-source AI models in diverse ways: small businesses automate customer support by fine-tuning models with their product information, developers utilize StableLM for generating digital content, and researchers use it as an experimental platform for AI safety studies. The model’s adaptability even extends to content marketers who incorporate StableLM for keyword research and crafting meta descriptions, enhancing SEO strategies. For privacy-sensitive tasks, like those in medical practices or legal firms, StableLM is deployed locally to ensure data remains within secure confines.

StableLM Model Family and Versions

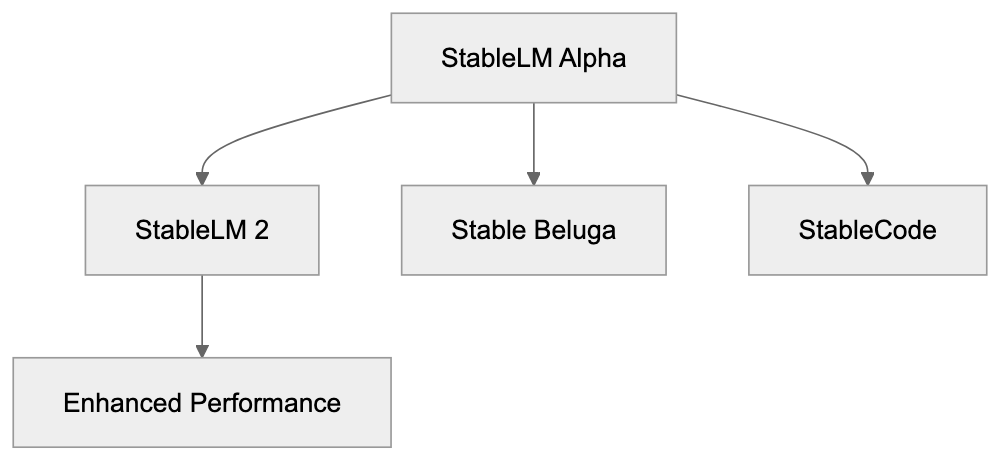

The StableLM models include several releases that cater to varied needs based on size, training, and specialization. The initial Alpha models, introduced in 3B and 7B parameter sizes, were released in April 2023. Following this, Stability AI unveiled larger 15B and 65B models. StableLM 2 is an upgrade with advanced training data and techniques, showing better performance across benchmarks, available in 1.6B, 3B, and 12B sizes. Among fine-tuned models, Stable Beluga stands out for conversational tasks, and StableCode specifically supports programming ambitions. Each version fulfills unique functionalities, whether enhancing instruction adherence or facilitating coding tasks.

Licensing and Commercial Use

StableLM Model Family Evolution:

StableLM’s open language models employ different licensing schemes. Most releases fall under the Creative Commons CC BY-SA-4.0 license, allowing commercial use provided that modifications and attribution are shared accordingly. Some versions of StableLM 2 use the Stability AI Non-Commercial Research Community License, with commercial versions offered under separate agreements, underscoring the diverse AI model licensing landscape. This flexible licensing appeals to businesses eager to create commercial solutions without royalties, differing from more restrictive open-source alternatives. A comprehensive understanding of licensing, like Mistral’s Apache 2.0 or LLaMA’s non-commercial terms, is crucial for production deployment.

Performance and Benchmarks

The performance of StableLM’s open language models varies depending on size and version, benchmarked for tasks like reasoning and language comprehension. StableLM 3B delivers commendable results for its size, scoring 40-45% on MMLU. Higher parameter models tend to perform better but require augmented resources. StableLM 2 represents enhanced capabilities with the 12B version competing well against other open-source AI models in various tasks. Stable Beluga’s strength lies in instruction-following and conversational benchmarks, like MT-Bench, yet it acknowledges that closed-source models like GPT-4 outperform it in complexity.

Comparison with Alternative Open Models

| Model | Size Range | License Type | Training Data | Best Use Case |

|---|---|---|---|---|

| StableLM | 1.6B-65B | CC BY-SA-4.0 / Custom | 1.5T tokens | General purpose, fine-tuning |

| LLaMA 2 | 7B-70B | Custom commercial | 2T tokens | Commercial applications |

| Mistral | 7B | Apache 2.0 | Undisclosed | Effective, deployment |

| Falcon | 7B-180B | Apache 2.0 | 1.5T tokens | High-performance tasks |

| MPT | 7B-30B | Apache 2.0 | 1T tokens | Commercial products |

StableLM is a direct competitor in the open language models arena, offering a variety of sizes and specialized versions. While LLaMA 2 from Meta sometimes surpasses StableLM on benchmarks, it involves complex licensing for larger deployments. Mistral and Falcon extend competition with their Apache 2.0 licensing, though Falcon’s larger models demand extensive hardware. MPT models excel at inference speed, catering to commercial aims. StableLM distinguishes itself with versatile size options and ready-to-use fine-tunes like Stable Beluga.

Technical Requirements and Deployment

Running StableLM necessitates suitable hardware aligned with model size. Smaller models like StableLM 2 1.6B run on consumer-grade GPUs with 8GB VRAM, while larger models demand greater resources. StableLM 3B requires about 12GB, and 65B models need multiple high-end GPUs. Deployment leverages frameworks such as Hugging Face Transformers, and support is extensive across both PyTorch and TensorFlow. Efficiency improvements like quantization (e.g., 8-bit and 4-bit) significantly lower memory requirements, albeit at modest performance costs. Cloud services like AWS provide options to host StableLM, balancing between upfront and ongoing expenses. Optimizations like vLLM and TensorRT enhance inference speed, crucial for reducing latency in end-user applications.

The Stability AI Ecosystem

Stability AI’s ecosystem extends beyond open-source language models to include solutions like Stable Diffusion for images and Stable Audio for sound. This curation of versatile AI tools, unified under openness, empowers developers to creatively combine capabilities for comprehensive applications. Competing with other AI hubs like Hugging Face, Stability AI’s collaborative ethos pushes the envelope in open AI services.

Fine-tuning and Customization

StableLM’s fine-tuning capabilities allow adaptation to specific needs, by training models on custom datasets for optimal performance. Techniques like parameter-efficient fine-tuning (through Hugging Face’s PEFT library) and LoRA offer pragmatic customization while conserving memory resources. Full fine-tuning, though resource-intensive, ensures maximal flexibility. Following fine-tuning, evaluating models on tailored tasks ensures targeted improvements over base models, employing tools like cloud GPUs where necessary.

Challenges and Limitations

Users of StableLM face certain limitations. The model may generate inaccurate outputs, a common challenge known as hallucination in all language models. Bias, reflecting the nuances of training data, also demands vigilant testing, especially for fairness. Despite its strengths, StableLM trails behind proprietary giants like GPT-4 in handling intricate reasoning tasks. Resource demands pose hurdles for some users, mitigated partially by techniques like quantization. Community-based support underscores open-source usage, with forums and documentation essential for resolving issues.

End

StableLM signifies Stability AI’s commitment to open language models, offering versatile, open-source AI tools that empower both developers and businesses. Its diverse model family caters to varied hardware capabilities and application needs. Specialized versions such as Stable Beluga furnish users with predefined solutions tailored for frequent requirements. The permissive licensing structure supports commercial ventures without restrictive fees. Although it lags behind market leaders like GPT-4 in peak performance, StableLM’s engagement within the open AI movement provides a valuable foundation for those seeking alternatives to proprietary AI services.

Frequently Asked Questions

What are the hardware requirements for running StableLM models?

Running StableLM requires hardware that matches the model size. Smaller models like StableLM 2 1.6B can operate on consumer-grade GPUs with 8GB of VRAM, while larger models demand more powerful systems—65B models may require multiple high-end GPUs to function effectively.

Can I use StableLM for commercial applications?

Yes, StableLM is available under flexible licenses that allow for commercial use. Most models fall under the Creative Commons CC BY-SA-4.0 license, which permits commercial applications, provided that modifications and attributes are shared. Some versions may require separate commercial agreements.

How can I fine-tune StableLM models for specific tasks?

StableLM models can be fine-tuned using custom datasets for better performance on targeted tasks. Techniques such as parameter-efficient fine-tuning through Hugging Face's PEFT library and LoRA enable customization while minimizing resource use, ensuring flexibility.

What types of applications can utilize StableLM?

StableLM supports a wide range of applications, including chatbots, content generation, code assistance, and even privacy-sensitive tasks. Businesses can use it for automating customer support, while researchers can explore AI safety by experimenting with the model.

What challenges might I face when using StableLM?

Users may encounter common challenges such as generating inaccurate outputs, known as hallucinations, and biases stemming from training data. Additionally, while StableLM is capable, it may not perform as well as proprietary models like GPT-4 in handling complex reasoning tasks.

How does StableLM compare to other language models?

StableLM competes with various open models by offering a range of sizes and specialized versions. While models like LLaMA 2 sometimes outperform StableLM on benchmarks, StableLM's licensing allows for greater flexibility in commercial applications compared to more restrictive alternatives.

Where can I find community support for StableLM?

Community support for StableLM is available through forums, documentation, and user groups. Engaging with these resources can help resolve issues quickly and provide additional insights into best practices for utilizing StableLM effectively.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.