Understanding AI2Bot: Allen Institute's AI Crawler Explained

Learn about AI2Bot, Allen Institute's web crawler for open-source AI training. How it works, its purpose, and impact on AI research.

What is AI2Bot and Why Does It Matter

AI2Bot is a web crawler operated by the Allen Institute for AI, known as AI2. This crawler systematically browses the internet to collect data for training open-source AI models. Such web crawlers, including AI2Bot, are essential tools in modern AI development. They gather the massive amounts of text data required to train language models and other AI systems.

The Allen Institute for AI developed this crawler specifically to support its research initiatives, especially for training its OLMo (Open Language Model) family. Unlike many commercial AI companies that keep their training data private, AI2 focuses on open-source development and transparent research practices. Therefore, the data collected by AI2Bot contributes to creating AI models that researchers and developers can freely access and study.

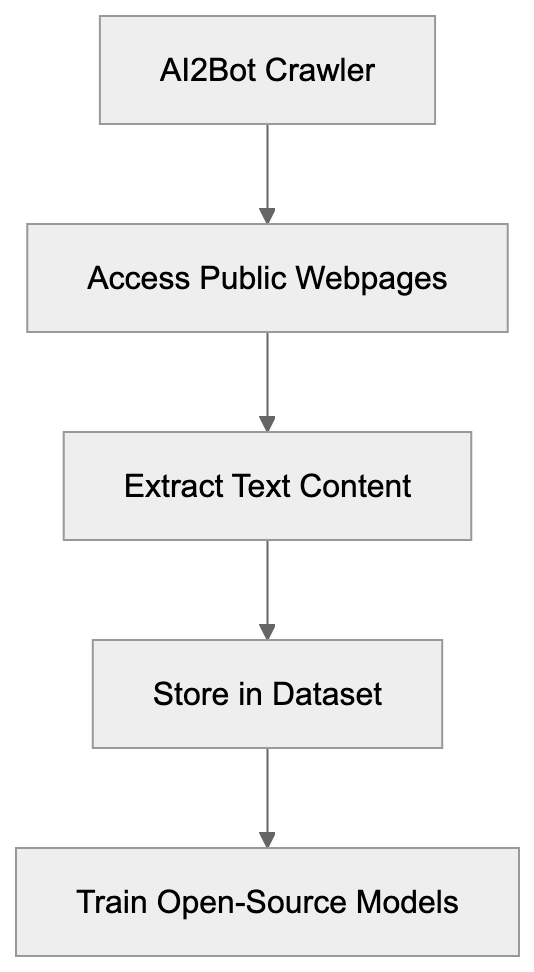

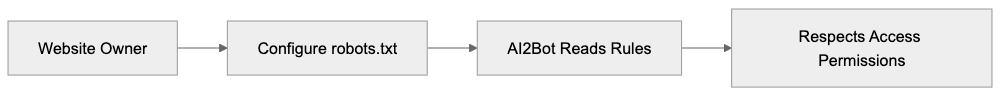

Web crawlers scan websites, extract text content, and store it in datasets. AI2Bot follows the same principle but specifically targets content useful for AI model training. The crawler respects robots.txt files and provides clear identification so website owners can manage how AI2 accesses their content.

The Allen Institute for AI Mission and Goals

AI2Bot Web Crawling Process:

Founded in 2014 by the late Paul Allen, co-founder of Microsoft, the Allen Institute for AI functions as a non-profit research institute dedicated to advancing artificial intelligence for the common good. Its primary focus centers on conducting high-impact AI research and making results openly available to the scientific community.

AI2 pursues several key research areas including natural language processing, computer vision, and reasoning systems. The institute employs over 100 researchers, engineers, and staff members who work on various AI projects. Unlike profit-driven tech companies, AI2 prioritizes transparency and open science principles in its work.

Over the years, the institute has released multiple open-source AI models and datasets, such as Semantic Scholar, various language models, and extensive training datasets. AI2Bot is pivotal in this mission as it collects the raw data needed to train these open-source models.

Its commitment to openness extends to publishing research papers, sharing code repositories, and providing free access to its AI tools. This approach allows smaller research teams and organizations to benefit from advanced AI technology without massive infrastructure investments.

How AI2Bot Works and Its User-Agent String

AI2Bot identifies itself through a specific user-agent string when accessing websites. Typically, the user-agent string appears as “AI2Bot (+https://allenai.org/crawler)” or similar variations. This identification allows website administrators to recognize the crawler and manage its access through robots.txt configurations.

The crawler operates by sending HTTP requests to web pages, similar to how a regular web browser works. However, instead of rendering pages for human viewing, AI2Bot extracts and stores text content for dataset creation. The process involves following links page to page, building a comprehensive collection of web content over time.

Website owners can control AI2Bot access in several ways. The robots.txt file lets administrators specify which parts of their site the crawler can or cannot access. Additionally, the crawler respects standard web protocols like rate limiting to avoid overwhelming servers with too many requests.

AI2 provides contact information and documentation about its crawler. Website owners who want to block AI2Bot or have questions about data usage can reach out to the institute directly. This transparency differs from some commercial crawlers that operate with less clear documentation.

The data collected gets processed and filtered before becoming part of training datasets. AI2 removes duplicate content, filters out low-quality text, and organizes the data for effective model training. This preprocessing ensures the final datasets provide maximum value for AI research.

The OLMo Project and Open-Source AI Training

OLMo stands for Open Language Model, representing AI2’s flagship effort in creating fully open language models. The project launched publicly in 2024 with the goal of providing complete transparency in AI model development. This includes releasing not just the final models but also training data, code, and evaluation frameworks.

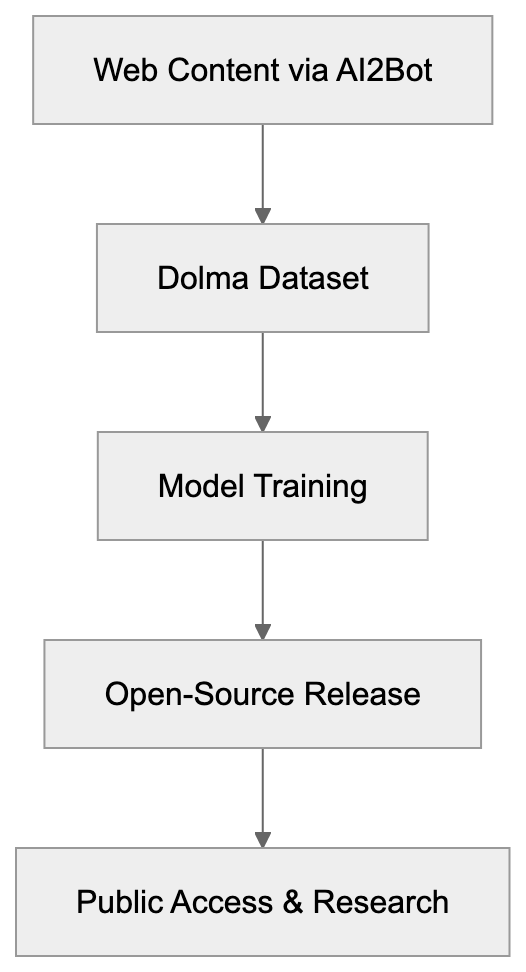

Most commercial language models like GPT or Claude keep their training data and methods private. OLMo takes the opposite approach by documenting every step of the model creation process. AI2Bot plays an important role by gathering web content that becomes part of OLMo’s training data, known as Dolma.

Dolma is a massive dataset containing billions of tokens of text from various sources. AI2Bot contributes web page content to this dataset alongside other sources like academic papers and code repositories. The dataset is released publicly so other researchers can reproduce OLMo’s training or use it for their projects.

The OLMo models come in different sizes to accommodate various use cases and hardware constraints. Researchers can download these models and run them on their infrastructure without licensing fees or usage restrictions. This accessibility promotes new ideas and allows smaller teams to experiment with state-of-the-art AI technology.

Training open-source models requires significant computational resources. AI2 has invested in high-performance computing infrastructure to train OLMo models from scratch. The training process can take weeks or months depending on model size and involves processing the entire Dolma dataset multiple times.

AI2Bot Compared to Other AI Crawlers

Several organizations operate web crawlers for AI training purposes, each with different policies, transparency levels, and purposes. Understanding these differences helps website owners make informed decisions about data access.

| Crawler | Organization | Primary Purpose | Opt-Out Method | Open-Source Models |

|---|---|---|---|---|

| AI2Bot | Allen Institute for AI | Training OLMo and research | robots.txt, contact form | Yes |

| CCBot | Common Crawl | Public dataset creation | robots.txt | N/A (dataset only) |

| GPTBot | OpenAI | Training GPT models | robots.txt | No |

| Google-Extended | Training Bard/Gemini | robots.txt | No | |

| ClaudeBot | Anthropic | Training Claude | robots.txt | No |

OLMo Development Pipeline:

Common Crawl’s CCBot creates publicly available web archives rather than training specific models. Researchers worldwide use Common Crawl data for various purposes including AI training. The dataset is updated monthly and contains petabytes of web content.

GPTBot from OpenAI collects data specifically for training GPT models. OpenAI provides limited information about its training data sources and does not release models as open-source. Website owners can block GPTBot through robots.txt but cannot access the resulting models freely.

Google-Extended crawls content for training Google’s AI products like Bard and Gemini. Google keeps training data and model architectures proprietary. The company does offer some AI tools publicly, but not the underlying models themselves.

ClaudeBot supports Anthropic’s Claude AI assistant development. Like OpenAI and Google, Anthropic maintains privacy around training data specifics. The company emphasizes AI safety research but does not release open-source versions of Claude.

AI2Bot stands out for its commitment to full transparency and open-source release. Website owners who allow AI2Bot access contribute to publicly available AI research rather than exclusively commercial products.

Impact on AI Research and Innovation

Open-source AI models like OLMo democratize access to advanced AI technology. Small research labs, universities, and independent developers can study and modify these models without expensive API fees or usage restrictions. This accessibility accelerates new ideas across the AI research community.

Transparent training data allows researchers to better understand model biases and limitations. When training data remains hidden, diagnosing why models behave certain ways becomes nearly impossible. OLMo’s open approach lets researchers trace model behavior back to specific training examples.

The AI research community benefits from reproducible results. Other teams can verify AI2’s findings by retraining OLMo models using the same data and code. This reproducibility strengthens scientific rigor in AI research compared to closed commercial development.

Educational institutions use open-source models like OLMo for teaching AI concepts. Students can experiment with real language models and understand how training data influences model behavior. This hands-on learning proves more valuable than just using commercial AI APIs.

Developers building specialized AI applications can fine-tune OLMo models for specific domains. Medical researchers might adapt the model for clinical text analysis, while legal professionals could customize it for contract review. Open-source availability enables these domain-specific innovations.

The competitive pressure from open-source projects pushes commercial AI companies toward better practices. When transparent alternatives exist, closed-source providers face more scrutiny about their data practices and model capabilities.

Managing AI2Bot Access to Your Website

Website owners have control over whether AI2Bot can access their content. The primary method involves configuring the robots.txt file in your site’s root directory. This file tells crawlers which parts of your site they can access.

AI2Bot Access Control Flow:

To completely block AI2Bot, add these lines to robots.txt:

User-agent: AI2Bot

Disallow: /This configuration prevents the crawler from accessing any pages on your site. Alternatively, you can allow access to some sections while blocking others by specifying different paths after Disallow.

Some website owners prefer allowing AI2Bot while blocking commercial crawlers. This choice supports open-source research while restricting commercial data collection. You can create separate rules for each crawler in robots.txt.

AI2 provides contact information for website owners with specific concerns or questions. The institute’s website includes details about its data practices and how it uses collected content. It typically responds to inquiries about crawler behavior or data usage.

Monitoring server logs helps identify AI2Bot activity on your site. Look for the AI2Bot user-agent string in access logs to see which pages the crawler visited and how frequently. This information helps assess the crawler’s impact on server resources.

Rate limiting can manage crawler traffic without completely blocking access. Most web servers support configuring maximum request rates per user-agent. This approach allows AI2Bot to collect data while preventing server overload.

Privacy Considerations and Data Usage

AI2Bot collects publicly accessible web content, meaning pages available without login requirements or paywalls. The crawler does not access password-protected areas or attempt to bypass security measures. This practice aligns with standard ethical web crawling guidelines.

The collected data becomes part of training datasets like Dolma, which AI2 releases publicly. This means content accessed by AI2Bot could appear in open datasets that anyone can download. Website owners should consider this when deciding whether to allow crawler access.

Personal information on public web pages might get included in training data. AI2 implements filtering processes to remove sensitive information, but automated systems cannot catch everything. Sites containing personal data should carefully consider robots.txt configurations.

AI2 states it respects copyright and intellectual property rights, but questions about AI training and copyright remain legally unsettled in many jurisdictions. Website owners concerned about copyright should consult legal advice regarding crawler access.

The institute’s non-profit status and research focus differ from commercial AI companies. Data collected by AI2Bot supports academic research rather than profit-generating products. Some content creators find this distinction meaningful when making access decisions.

Transparency in AI2’s data practices exceeds most commercial alternatives. The organization documents data sources, processing methods, and usage clearly. This openness allows informed decision-making by website owners and content creators.

The Future of Open-Source AI Development

Open-source AI models continue gaining traction as alternatives to closed commercial systems. Projects like OLMo demonstrate that transparent development can produce competitive results. More research institutions and organizations are likely to adopt similar approaches.

The demand for training data will keep growing as AI models become larger and more sophisticated. Web crawlers like AI2Bot will play increasingly important roles in gathering varied text content. Balancing data collection needs with creator rights remains an ongoing challenge.

Regulatory frameworks around AI training data are evolving worldwide. New laws might require more explicit consent for using web content in AI training. Crawlers that prioritize transparency like AI2Bot may adapt more easily to changing regulations.

Collaboration between open-source projects could accelerate development. Multiple research institutions might share datasets and training resources to collectively build better models. AI2Bot’s data could contribute to broader collaborative efforts.

Advances in data quality filtering will improve training dataset value. Better automated systems for removing low-quality or problematic content mean crawlers can collect data more effectively. AI2 continues developing these filtering technologies.

The gap between open-source and commercial AI capabilities may narrow over time. As open projects like OLMo mature, they could match or exceed proprietary alternatives in specific domains. This competition benefits the entire AI ecosystem.

End

AI2Bot represents the Allen Institute for AI’s commitment to open-source AI research and transparent development practices. The crawler collects web data for training models like OLMo, which are released publicly for anyone to use and study. This approach differs significantly from commercial AI companies that keep training data and models proprietary.

Website owners can manage AI2Bot access through robots.txt configurations and have more transparency about data usage compared to many alternatives. The crawler supports academic research and open-source development rather than exclusively commercial products. Understanding AI2Bot’s role helps make informed decisions about allowing AI training data collection.

The open-source AI movement continues growing with projects like OLMo leading the way. AI2Bot plays an essential part in gathering the training data needed for these transparent and accessible AI models. As AI technology evolves, the balance between data collection and creator rights will remain an important ongoing conversation.

Frequently Asked Questions

What types of data does AI2Bot collect?

AI2Bot collects publicly accessible web content, including text from web pages that do not require logins or subscriptions. This data is then used to train open-source AI models like OLMo, ensuring it is available for research and development purposes.

How can I block AI2Bot from accessing my site?

Website owners can block AI2Bot by using the robots.txt file. To prevent AI2Bot from accessing any part of your site, you can add the following lines: User-agent: AI2Bot

Disallow: /.

Does AI2Bot respect privacy concerns?

Yes, AI2Bot adheres to ethical web crawling standards by not accessing password-protected areas. While AI2 implements filtering to remove sensitive information from collected data, it's important for website owners to consider privacy when allowing crawler access.

Can I use the data collected by AI2Bot for my own projects?

Yes, the data collected by AI2Bot is intended for public use, especially in developing open-source AI models. Researchers and developers can use datasets like Dolma for various AI projects without facing licensing fees.

What distinguishes AI2Bot from other web crawlers?

AI2Bot is notable for its commitment to transparency and open-source development. Unlike many commercial crawlers, it allows website owners to manage access and openly shares collected data for academic research rather than for profit generation.

How does AI2 ensure the quality of the data collected?

AI2 employs filtering processes to remove duplicate and low-quality content from the datasets. By preprocessing the gathered data, AI2 ensures that the training data used for models like OLMo is of high value and quality for AI research.

What impact does AI2Bot have on the AI research community?

AI2Bot significantly contributes to the AI research community by providing open access to high-quality training data. This democratization allows smaller labs and independent developers to innovate and conduct experiments, fostering a collaborative and open research environment.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.