Understanding Baiduspider: Baidu's Search Crawler Explained

Explore Baiduspider, Baidu's powerful search crawler. Understand its role in indexing, user-agent strings, and its connection to ERNIE AI.

Introduction

Baiduspider, the web crawler of Baidu, is central to Baidu search, the leading search engine in China, holding over 70% of the search engine market share in the country. Think of it like Googlebot, but specifically designed for the Chinese internet ecosystem. Web crawlers, like Baiduspider, function as automated bots that explore websites, interpret their content, and index everything for search engines. Without crawling technology such as Baiduspider, search engines wouldn’t know what content exists on the web or how to rank it. Baiduspider plays an important role in how millions of Chinese websites get discovered and ranked on Baidu, which is the most important search engine for Chinese users. The Baidu crawler connects to Baidu’s AI systems, including ERNIE AI, which processes and understands the content it collects, enhancing Baidu’s AI capabilities. For developers and website owners targeting Chinese markets, understanding how Baiduspider works is crucial for visibility in Baidu’s search results.

What is Baiduspider

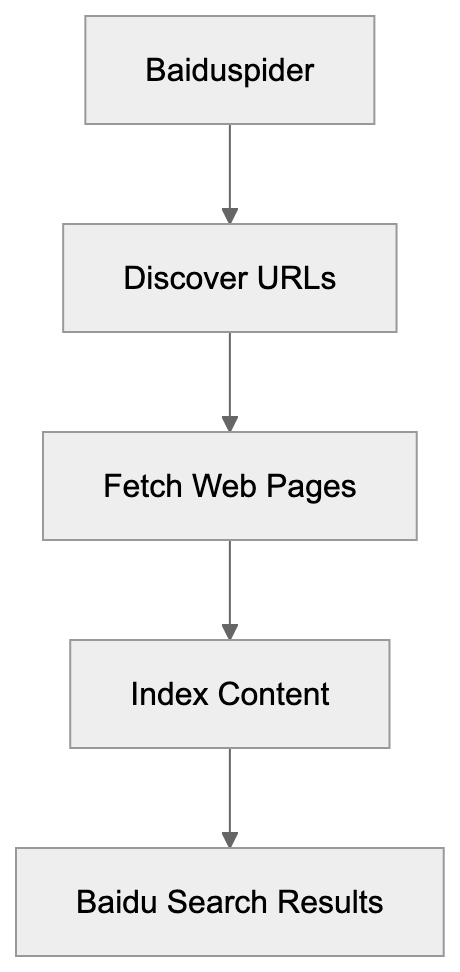

Web Crawling Process:

Baiduspider is the official web crawler operated by Baidu Inc. It systematically browses the internet to find and download web pages for Baidu’s search index, ensuring up-to-date search results. The Chinese search bot continuously visits websites, follows links from one page to another, and reads HTML content, JavaScript, CSS files, and other resources to understand the page content. The Baidu crawler uses specific user-agent strings to identify itself when making requests to web servers. These user-agent strings look something like “Mozilla/5.0 (compatible; Baiduspider/2.0),” informing website owners of Baidu’s presence. The collected data is sent back to Baidu’s servers to be processed, analyzed, and included in their massive search index. Without this constant activity, Baidu couldn’t provide up-to-date search results.

Why Baiduspider Exists and Its Purpose

Baidu created Baiduspider to build and maintain their search index for the Chinese market. Holding over 70% of the search engine market share in China, Baidu is the most important search engine for Chinese users. The crawler serves multiple purposes beyond basic indexing. It helps Baidu understand content quality, detect spam, identify duplicate content, and assess page relevance for search queries. Baiduspider also feeds data into Baidu’s AI systems, including ERNIE AI, which is a language model similar to GPT. The crawler effectively handles both simplified and traditional Chinese characters while respecting local regulations and content requirements specific to the Chinese internet. For businesses targeting Chinese consumers, getting indexed by Baiduspider is necessary for online visibility. The crawler also helps Baidu maintain its competitive edge against international search engines with a limited presence in China.

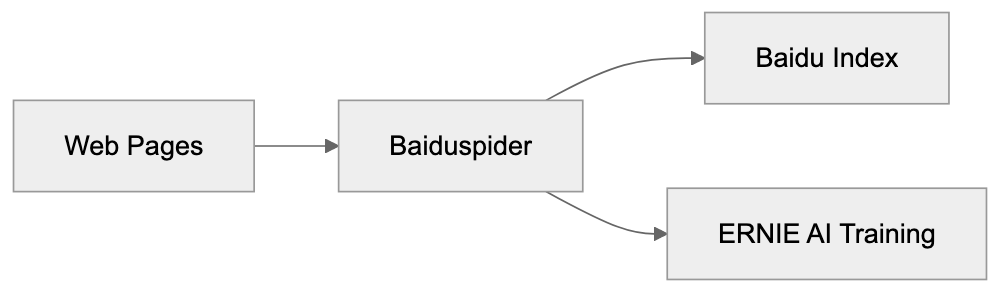

Baiduspider Data Flow:

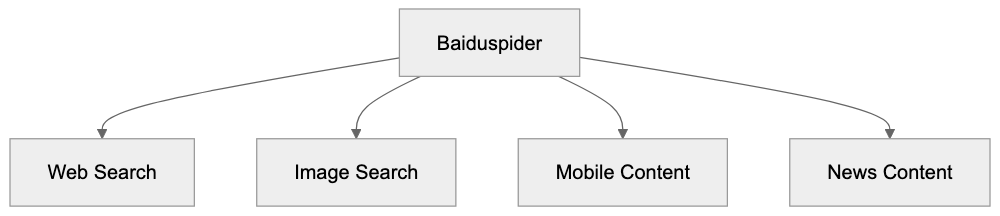

How Baiduspider is Used

Website owners and developers interact with Baiduspider primarily through their server logs and robots.txt files. The robots.txt file controls which pages Baiduspider can or cannot crawl on your site. Many content management systems and web hosting platforms automatically log visits from Baiduspider alongside other search engine bots. Developers can identify Baiduspider traffic by checking for its user-agent strings in server logs. Baidu operates several different versions of Baiduspider for distinct purposes, including web search, image search, news, and mobile content. Each variant has slightly different user-agent strings. Website owners can verify legitimate Baiduspider visits through reverse DNS lookups on IP addresses that should resolve to baidu.com domains. The crawler respects standard crawl delay directives in robots.txt files, making proper configuration vital for SEO professionals focusing on the Chinese market. Some websites may block or limit Baiduspider if they are not targeting Chinese audiences.

Baiduspider User-Agent Strings

Understanding Baiduspider user-agent strings helps developers properly identify and manage crawler traffic. The main web crawler uses user-agent strings like “Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html).” Different Baidu services use specific identifiers. For instance, Baiduspider-image identifies the image crawler, while Baiduspider-video targets video content. News content is crawled by Baiduspider-news, and mobile content is accessed by Baiduspider-mobile. These user-agent strings are crucial for server configuration and analytics. They can help create specific rules in your robots.txt file or web server configuration. Some websites serve different content to Baiduspider compared to regular users, known as cloaking, though this practice can be risky. The user-agent string also includes a URL to Baidu’s documentation about their spider. Developers should inspect their analytics tools to monitor how often Baiduspider visits their sites and which pages it frequently accesses.

Connection to ERNIE AI

Baiduspider feeds data directly into Baidu’s AI ecosystem, including ERNIE AI. ERNIE stands for Enhanced Representation through kNowledge Integration, Baidu’s version of models like GPT-4. The Baidu crawler collects massive amounts of Chinese language content, which becomes training data for ERNIE. This connection between crawling technology and AI training is similar to how other search engines use crawler data to enhance their AI systems. The quality and extent of Baiduspider’s crawling directly affect ERNIE’s language understanding capabilities. Baidu has stated that ERNIE is trained on trillions of web pages, most of which were discovered and indexed by Baiduspider. The crawler helps ERNIE AI stay updated with current events and content trends. This integration signifies that Baiduspider isn’t just indexing for search; it also gathers training data for AI development. The relationship between the Baidu crawler and ERNIE AI represents a shift in how search engines view web crawling, transcending mere indexing to feeding intelligent systems.

Baiduspider vs Other Search Crawlers

Different search engines use varied crawlers with distinct capabilities and focus areas. Here’s a comparison of Baiduspider and other major alternatives:

| Crawler | Search Engine | Primary Market | AI Integration | Special Features |

|---|---|---|---|---|

| Baiduspider | Baidu | China | ERNIE AI | Chinese language optimization, local regulations compliance |

| Googlebot | Global | Gemini | Advanced JavaScript rendering, mobile-first indexing | |

| Bingbot | Bing | Global | Copilot (GPT-4) | Powers Microsoft Copilot search, excellent image indexing |

| Yandexbot | Yandex | Russia/CIS | YandexGPT | Cyrillic language focus, regional optimization |

| DuckDuckBot | DuckDuckGo | Global | No AI | Privacy-focused, uses multiple sources |

Crawler Variants:

Baiduspider differs from Googlebot in several ways. It emphasizes Chinese language content and follows different crawling priorities based on content type. Baiduspider tends to crawl Chinese websites more frequently and thoroughly than international crawlers do. It must operate within Chinese regulatory frameworks, affecting content prioritization. Compared to Bingbot, Baiduspider exhibits deeper integration with local Chinese platforms and services. While Googlebot may be more advanced in rendering JavaScript-heavy sites, Baiduspider is optimized for the types of sites common in the Chinese internet ecosystem. For websites targeting multiple markets, you will likely observe traffic from several of these crawlers in your logs.

Managing Baiduspider on Your Website

To control how Baiduspider interacts with your site, several methods are available. The robots.txt file is the primary control mechanism. You can completely block Baiduspider by adding “User-agent: Baiduspider” followed by “Disallow: /” in your robots.txt. Alternatively, permit Baiduspider while restricting specific directories. The crawl-delay directive can slow down Baiduspider if it overwhelms your server. Server-side configuration provides another layer of control. You can use .htaccess files on Apache servers or Nginx configuration to manage Baiduspider access. Some administrators block Baiduspider entirely to reduce server load if they aren’t targeting Chinese markets. Meta robots tags on individual pages can prevent indexing even if crawling is allowed. The noindex tag instructs Baiduspider to crawl but not index specific pages. Monitoring server logs reveals Baiduspider’s behavior on your site. Look for patterns in crawl frequency, which pages it visits most, and any errors encountered. Baidu Webmaster Tools offers official resources for managing how your site appears in Baidu search, though it requires verification and registration.

Technical Specifications and Behavior

Baiduspider operates on specific technical parameters affecting how it crawls websites. The web crawler typically respects the robots exclusion protocol and follows standard web crawling etiquette. It sends HTTP requests with identifying headers, including the Baiduspider user-agent string. The crawler handles both HTTP and HTTPS protocols. Response to redirects works similarly to other major crawlers, following 301 and 302 redirects appropriately. Baiduspider’s crawl rate varies based on site authority, update frequency, and server response times. High-authority sites with frequently updated content get crawled more often. The crawler can execute some JavaScript, though its capabilities might differ from Googlebot’s rendering engine. Baiduspider respects the meta refresh tag and canonical tags for managing duplicate content. The crawler handles cookies and can maintain session state when necessary. XML sitemaps submitted through Baidu Webmaster Tools help guide crawling priorities. The Baidu crawler typically identifies itself honestly and doesn’t disguise its identity, unlike some malicious scrapers.

Best Practices for Baiduspider Optimization

Website owners targeting Chinese markets should follow specific practices for Baiduspider. First, ensure your hosting infrastructure provides good connectivity to China. Slow server response times from Chinese locations can reduce crawl frequency. Use simplified Chinese characters for content targeting mainland China users. Submit your sitemap through Baidu Webmaster Tools to help the crawler find all your pages. Keep your robots.txt file clean and properly formatted. Test it using Baidu’s robots.txt validator tool. Ensure your site functions well without heavy JavaScript rendering since Baiduspider’s JavaScript capabilities might be limited. Create content valuable to Chinese users and follow local content guidelines. Avoid duplicate content issues by using canonical tags properly. Monitor your crawl stats in Baidu Webmaster Tools to identify and resolve any crawling problems. Fix broken links and 404 errors promptly, as these waste crawler resources. Consider your site’s mobile version since mobile search dominates in China. Use appropriate meta tags, including descriptions and keywords, which still hold value in Baidu SEO. Building quality backlinks from reputable Chinese websites can increase crawl priority.

End

Baiduspider serves as Baidu’s eyes on the web, continuously crawling and indexing content for China’s dominant search engine. The Baidu crawler is similar to Googlebot and other major search engine bots but is specifically optimized for Chinese language content and the Chinese internet ecosystem. Its integration with ERNIE AI showcases how modern search crawlers do more than just index; they also gather training data for artificial intelligence systems. Understanding Baiduspider’s user-agent strings, behavior patterns, and technical specifications helps developers and website owners effectively manage their sites. Whether you’re targeting Chinese markets or simply want to understand the global search crawler scene, knowing how Baiduspider works provides valuable insight. The crawler continues to evolve alongside Baidu’s search technology and AI development, making it an essential component of the global search engine infrastructure. For anyone working with websites intended for Chinese audiences, properly configuring your site for Baiduspider is critical for visibility in the world’s most populous internet market.

Frequently Asked Questions

How can I ensure my website is indexed by Baiduspider?

To ensure Baiduspider indexes your website, create and submit an XML sitemap through Baidu Webmaster Tools. Additionally, configure your robots.txt file to allow crawling, and use proper HTML structures with clear navigation for better discoverability.

What should be included in the robots.txt file for Baiduspider?

Your robots.txt file should specify user-agent directives for Baiduspider and outline which pages or directories it is allowed to crawl. You can use 'User-agent: Baiduspider' followed by 'Disallow: /' to block all access or specify particular folders to restrict.

How often does Baiduspider crawl websites?

The crawl frequency of Baiduspider depends on several factors, including the website's authority, the frequency of updated content, and server response times. High-authority sites with regular updates are crawled more frequently than those with static content.

What are the implications of blocking Baiduspider?

Blocking Baiduspider may lead to your website not appearing in Baidu search results, which can significantly reduce visibility within the Chinese market. If you are not targeting Chinese users, blocking it might help conserve server resources.

How can I monitor Baiduspider's activity on my site?

You can monitor Baiduspider's activity by checking server logs for its user-agent strings. Additionally, Baidu Webmaster Tools provides specific insights into how often your site is crawled along with any issues encountered.

What role does ERNIE AI play in relation to Baiduspider?

ERNIE AI, Baidu's language model, relies on the data collected by Baiduspider to enhance its language understanding capabilities. The content Baiduspider indexes becomes training data for ERNIE, showcasing the connection between web crawling and AI development.

What optimizations should I consider for Baiduspider SEO?

For Baiduspider SEO optimization, ensure your site hosts fast connectivity to China, use simplified Chinese for content targeting, create a sitemap, and maintain a clean robots.txt file. Regularly monitor crawl stats and fix any broken links to enhance your site's crawl efficiency.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.