ChatGLM-Spider: The Zhipu AI Crawler for Model Training

Learn about ChatGLM-Spider by Zhipu AI, its role in ChatGLM model training, user-agent details, and how to block it from your website.

What is ChatGLM-Spider

ChatGLM-Spider is a web crawler operated by Zhipu AI, a Chinese artificial intelligence company based in Beijing. This AI web crawler collects web data to train ChatGLM language models. Unlike search engines that index pages for search purposes, the ChatGLM-Spider gathers training data for AI models. Companies developing large language models need vast amounts of text data. They deploy crawlers to gather information from publicly accessible websites, often using a robots.txt file to manage crawler access. ChatGLM-Spider serves this purpose for Zhipu AI’s ChatGLM model family. The Zhipu AI crawler identifies itself through a specific user-agent string when visiting websites. Website owners can detect and control this bot’s access through standard web protocols. Understanding these crawlers is crucial as they directly impact how AI models learn and what data they contain.

Why ChatGLM-Spider Exists

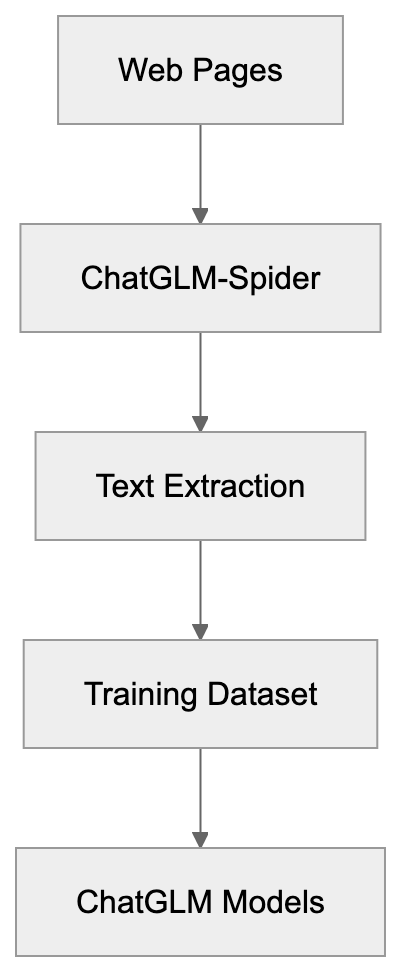

ChatGLM-Spider Operation Overview:

Zhipu AI created the ChatGLM-Spider to enhance their Chinese language models. Large language models require billions of text examples to function properly. The ChatGLM series focuses on Chinese language understanding and generation. Acquiring quality Chinese text data at scale mandates automated collection methods. Manual data gathering would be both time-consuming and costly. Web crawlers solve this challenge by automatically visiting millions of pages. The ChatGLM training bot reads content, processes it, and adds useful text to training datasets. This data trains the ChatGLM models to understand language patterns, facts, and context. Without crawlers like this, building competitive Chinese AI models would be nearly impossible.

How ChatGLM-Spider Works

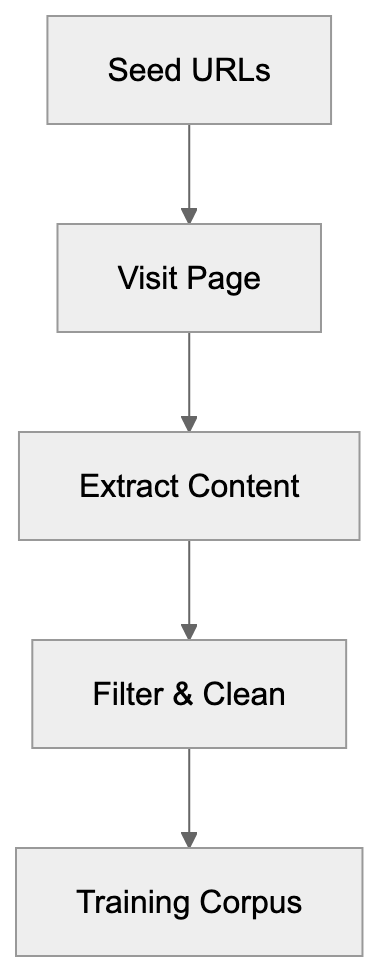

The ChatGLM training bot operates similarly to other web crawlers. It starts with seed URLs and follows links to find new pages. When the crawler visits a page, it sends an HTTP request with a specific user-agent string. The user-agent for ChatGLM-Spider typically appears as:

Mozilla/5.0 (compatible; ChatGLM-Spider/1.0; +https://www.zhipuai.cn)

This identifier informs website servers about the bot’s request. The crawler downloads the page content and extracts text data. It filters out non-content elements like navigation bars and ads. The bot respects robots.txt files, which provide instructions on what areas of a site crawlers can access. After data collection, the system processes and cleans the text. This cleaned data becomes part of the training corpus. The crawler likely runs continuously to gather fresh content, with Zhipu AI updating their models regularly with new data.

Web Crawler Data Collection Process:

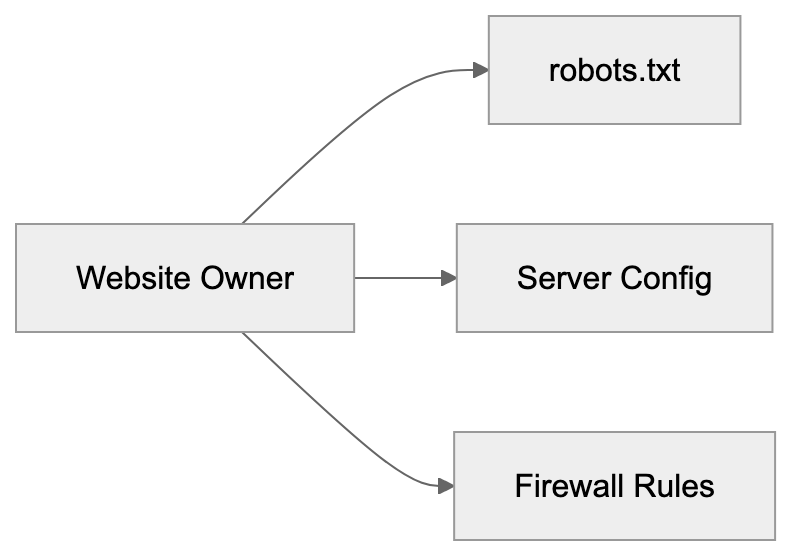

Blocking ChatGLM-Spider from Your Website

Website owners can control whether the ChatGLM-Spider accesses their content. The most common method is using a robots.txt file in the website’s root directory to direct crawlers on their access permissions. To block ChatGLM-Spider completely, add these lines to your robots.txt:

User-agent: ChatGLM-Spider

Disallow: /If you want to allow some access but restrict certain areas, specify paths like:

User-agent: ChatGLM-Spider

Disallow: /private/

Disallow: /user-data/Another option is server-level blocking, configuring web servers to return 403 errors when detecting the ChatGLM-Spider user-agent. This works even if the bot ignores robots.txt. Most web servers like Apache and Nginx support user-agent-based blocking. You can also use firewall rules to block IP ranges, although this is less reliable due to distributed crawlers. The robots.txt method remains the standard and most respected approach.

ChatGLM-Spider vs Other AI Crawlers

Many companies run AI crawlers to collect training data, each with different focuses and behaviors. Here’s how the Zhipu AI crawler, ChatGLM-Spider, compares to major alternatives:

| Crawler Name | Company | Primary Focus | Robots.txt Compliance | Geographic Focus |

|---|---|---|---|---|

| ChatGLM-Spider | Zhipu AI | Chinese language models | Expected | China |

| GPTBot | OpenAI | General-purpose LLMs | Yes | Global |

| Google-Extended | Bard/Gemini training | Yes | Global | |

| CCBot | Common Crawl | Open dataset creation | Yes | Global |

| ClaudeBot | Anthropic | Claude model training | Yes | Global |

| Bytespider | ByteDance | Multiple AI products | Mixed reports | Global |

Access Control Methods:

ChatGLM-Spider stands out due to its focus on Chinese content. While GPTBot and Google-Extended target global multilingual data, ChatGLM-Spider prioritizes Chinese-language websites, aligning with ChatGLM’s specialization in Chinese language tasks, as detailed in Wikipedia’s article on robots.txt. All these crawlers should respect robots.txt directives, but compliance levels can vary.

The ChatGLM Model Family

Understanding ChatGLM-Spider involves knowing about the models it supports. ChatGLM is a series of bilingual language models from Zhipu AI, handling both Chinese and English but excelling at Chinese language tasks. The first public version, ChatGLM-6B, was a 6 billion parameter model released as an open-source project. Later versions, ChatGLM2-6B and ChatGLM3-6B, improved capabilities. Larger commercial versions are available through their API platform. These models compete with international offerings like GPT-4 and Claude in the Chinese market, powering applications like chatbots, content generation, and question-answering systems. The data collected by ChatGLM-Spider directly influences these models’ performance.

Privacy and Data Usage Concerns

When the ChatGLM-Spider crawls your website, it collects publicly accessible content, raising data usage and privacy questions. The crawler gathers text from public pages, and if your site has user-generated content, that content might end up in training data. This concerns many website owners and content creators. Unlike search engines that index content, AI crawlers use data to train models, which can generate new content based on learned patterns. You have no control over how trained models utilize these patterns. Blocking the crawler is your best option to prevent your content from being used, as data removal post-training is nearly impossible.

Legal and Ethical Considerations

Web scraping for AI training exists in a legal gray area. In many jurisdictions, collecting publicly accessible data is legal, but how that data is used can create legal issues. Copyright concerns arise when models closely reproduce training data. Terms of service violations can occur if websites explicitly prohibit automated data collection. Different countries have different rules about web scraping and data usage. Zhipu AI must comply with Chinese internet and data protection laws. Website owners in other countries may have limited legal recourse if they object to crawling. The ethical debate around AI training data continues to evolve.

Impact on Website Performance

AI web crawlers like ChatGLM-Spider can affect your website’s performance and costs. Each crawler visit uses server resources and bandwidth. If the ChatGLM-Spider crawls aggressively, it may slow down user page loads. Excessive crawling can increase hosting costs, especially on bandwidth-limited plans. Legitimate crawlers implement rate limiting to avoid overloading servers, spacing out requests, and minimizing server strain. If performance issues arise, check server logs for crawler activity, looking for the ChatGLM-Spider user-agent string in access logs to assess request volumes.

To manage crawl load, you can implement crawl-delay directives in robots.txt:

User-agent: ChatGLM-Spider

Crawl-delay: 10This instructs the crawler to wait 10 seconds between requests. Although not all crawlers respect crawl-delay, it’s worth trying. Server-side rate limiting provides more reliable protection.

The Future of AI Web Crawlers

AI training crawlers will likely become more common as more companies develop language models. Already, crawlers from OpenAI, Google, Anthropic, Meta, and others are active. Chinese AI companies besides Zhipu AI also run their own crawlers, creating a complex scene for website owners. Managing access for multiple AI crawlers could become burdensome. Industry standards may emerge to simplify crawler management. Some proposals suggest unified opt-out mechanisms for AI training data collection. Website owners could signal once whether they allow AI training data collection, simplifying management across crawlers. However, achieving global agreement on such standards faces challenges due to varied legal jurisdictions.

Frequently Asked Questions

What steps can I take to block ChatGLM-Spider from accessing my website?

You can block ChatGLM-Spider by adding specific directives to your robots.txt file. To deny all access, include 'User-agent: ChatGLM-Spider' followed by 'Disallow: /'. For selective access, you can specify particular paths to restrict.

How does ChatGLM-Spider collect data from websites?

ChatGLM-Spider operates similarly to other web crawlers by starting with seed URLs and following links to discover new pages. It sends HTTP requests with a unique user-agent string to identify its activity and gathers text while filtering out non-content elements.

Can I control how my website's data is used by ChatGLM-Spider?

While you can block ChatGLM-Spider to prevent it from accessing your data, once the data is collected, you have little control over its use in AI training. The best way to protect your content is to prevent access before data collection occurs.

What are the potential legal implications of AI web crawlers like ChatGLM-Spider?

The legality of web scraping for AI purposes often exists in a gray area. While collecting publicly accessible data is typically legal, issues arise over how that data is subsequently used, especially in terms of copyright and terms of service violations.

How do crawlers affect the performance of my website?

Crawlers can consume server resources and bandwidth, potentially slowing down your website or increasing hosting costs. To manage this, you can implement rate limiting or crawl-delay directives in your robots.txt file to control the frequency of requests from crawlers.

What distinguishes ChatGLM-Spider from other AI crawlers?

ChatGLM-Spider is specifically designed to enhance Chinese language models, while other crawlers like GPTBot and Google-Extended target a broader, multilingual audience. This specialization in Chinese content makes ChatGLM-Spider distinct in its operational goals and approach.

What is the future of AI web crawlers?

As AI models grow in popularity, the use of web crawlers is expected to increase, potentially leading to more standardized practices for managing crawler access. Proposals for unified opt-out mechanisms may simplify the process for website owners, although achieving consensus amidst varying legal frameworks remains challenging.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.