Understanding DeepSeekBot: AI Training Crawler Explained

Learn how DeepSeekBot crawls the web for AI training, its user-agent string, blocking methods, and how it compares to other AI crawlers.

What is DeepSeekBot?

DeepSeekBot is a web crawler operated by DeepSeek, a Chinese AI company developing large language models and AI assistants. DeepSeekBot crawls websites across the internet to collect text data used for DeepSeek AI training, enabling the development of advanced AI models. Web crawlers like DeepSeekBot are essential because AI models require massive amounts of text to learn, facilitating the creation of sophisticated language models. Without such crawlers, AI companies would struggle to gather enough training data, hindering the advancement of AI technologies. DeepSeek, launched in 2023, quickly gained attention for its competitive AI models, and DeepSeekBot emerged as the company scaled up its operations, contributing to the rapid development of AI technologies. Like OpenAI, Anthropic, and Google crawlers, DeepSeekBot scans publicly accessible web pages to gather content for DeepSeek’s training datasets. It automates web scraping, respects robots.txt files (when properly configured), and can be identified by its user-agent string, which is detectable and can be blocked if needed.

Why DeepSeekBot Exists and Its Purpose

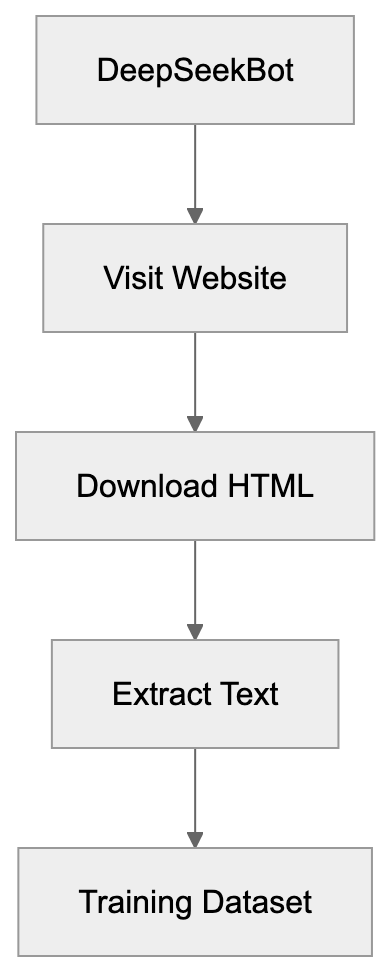

DeepSeekBot Web Crawling Process:

AI models learn by analyzing large volumes of text data. The greater the variety and completeness of the training data, the better the model performs. DeepSeekBot serves the crucial function of automatically gathering training data from the internet. Without web crawlers, DeepSeek would need to manually collect text or purchase costly datasets. Web crawling offers a cost-effective solution to building large training datasets. The bot’s purpose is simple: collect as much quality text as possible from public websites, including articles, forum posts, and educational content. It visits websites, reads HTML content, extracts text, and stores it for later training. DeepSeek uses this data to improve its language models’ understanding of various linguistic elements. Competing with major players like OpenAI and Anthropic requires comparable training data volumes. DeepSeekBot helps level the field by providing access to the same web content other AI companies crawl. The bot runs continuously, revisiting sites to capture new and updated content over time.

How DeepSeekBot is Used in Practice

DeepSeek operates DeepSeekBot as part of its data collection infrastructure. Running on DeepSeek’s servers, the bot follows a list of URLs to crawl, starting with popular websites and following links to find new pages. When DeepSeekBot visits a webpage, it downloads the HTML and extracts readable text, filtering out code, scripts, and styling. The extracted text is cleaned and formatted before being added to DeepSeek’s training datasets, which feed into the training pipeline for DeepSeek’s language models. Website owners usually notice DeepSeekBot in their server logs, identified by its user-agent string:

Mozilla/5.0 (compatible; DeepSeekBot/1.0; +https://deepseek.com)

This user-agent string signals that the visitor is DeepSeekBot rather than a human browser. The URL points to DeepSeek’s website, where more information about the crawler may be available. Web developers can identify and potentially block the bot using this user-agent. Although it’s expected to respect the robots.txt file, some owners report that DeepSeekBot crawls aggressively, making many requests in short periods, potentially increasing server load and bandwidth costs.

DeepSeekBot User-Agent and Technical Details

The DeepSeekBot user-agent string is a key identifier for this crawler, typically appearing as:

Mozilla/5.0 (compatible; DeepSeekBot/1.0; +https://deepseek.com)

Variations may exist depending on the version. The “Mozilla/5.0” prefix is standard for many bots to maintain compatibility with web servers, and the “compatible” tag indicates browser-like behavior. The “DeepSeekBot/1.0” portion identifies the specific crawler and version, while the URL provides a reference point for webmasters seeking more information. Website owners can check access logs for this user-agent string to see if DeepSeekBot has visited their site. Logs typically record each request along with user-agent, timestamp, requested URL, and response code. Frequent requests from this user-agent indicate active crawling by DeepSeekBot. Like a regular browser, the bot makes HTTP GET requests, following the same protocols and handling redirects and error codes appropriately. Unlike malicious scrapers, legitimate AI crawlers like DeepSeekBot typically identify themselves honestly through their user-agent.

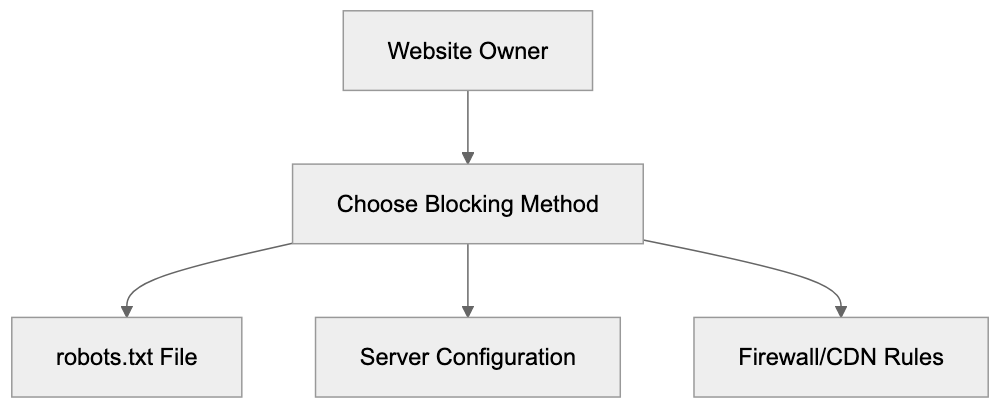

How to Block DeepSeekBot

Website owners who prefer not to have DeepSeekBot crawl their content have several blocking options. The most common method is updating the robots.txt file, located in the root directory of your website, to instruct crawlers on which parts of the site to avoid. To block DeepSeekBot completely, add these lines to your robots.txt file:

User-agent: DeepSeekBot

Disallow: /

Blocking Methods Overview:

To block specific sections, specify those paths instead, for example:

User-agent: DeepSeekBot

Disallow: /private/

Disallow: /admin/Another option is server-level blocking. Configure your web server (Apache, Nginx, etc.) to return an error code when it detects the DeepSeekBot user-agent. For Nginx:

if ($http_user_agent ~* DeepSeekBot) {

return 403;

}For Apache, use .htaccess:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} DeepSeekBot [NC]

RewriteRule .* - [F,L]AI Crawler Landscape:

These server-level blocks immediately reject requests from DeepSeekBot without delivering content. A third option is using a firewall or CDN that supports user-agent blocking. Services like Cloudflare allow you to create firewall rules to block specific user agents. Remember, blocking AI crawlers means your content won’t be included in those AI models’ training datasets, potentially affecting AI assistants’ ability to reference or understand your content.

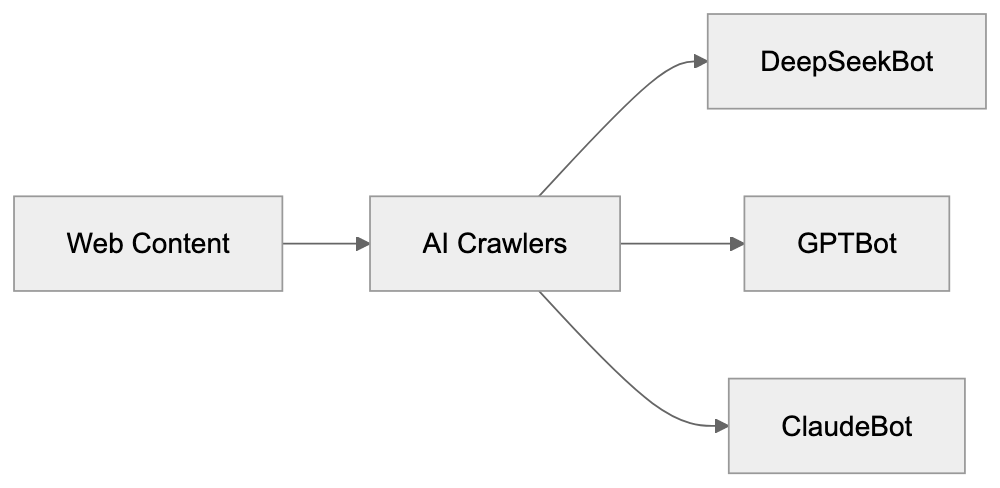

Comparing DeepSeekBot to Other AI Crawlers

DeepSeekBot is one of many AI crawlers scanning the web. Here’s how it compares to alternatives:

| Crawler Name | Company | User-Agent String | Robots.txt Support | Geographic Origin |

|---|---|---|---|---|

| DeepSeekBot | DeepSeek | DeepSeekBot/1.0 | Expected | China |

| GPTBot | OpenAI | GPTBot/1.0 | Yes | USA |

| CCBot | Common Crawl | CCBot/2.0 | Yes | USA |

| ClaudeBot | Anthropic | ClaudeBot/1.0 | Yes | USA |

| Google-Extended | Google-Extended | Yes | USA | |

| Bytespider | ByteDance | Bytespider | Partial | China |

All these crawlers serve similar purposes for different companies. GPTBot collects data for OpenAI’s models like GPT-4, while CCBot gathers for Common Crawl, widely used by AI companies. Claude-Web supports Anthropic’s Claude models, Google-Extended aids Google, and Bytespider supports ByteDance’s AI initiatives. DeepSeekBot joins this competitive landscape as DeepSeek vies for a position in the AI space. The main differences lie in the companies behind each crawler and their crawling policies. Most major AI crawlers from US companies publicly document their behavior and provide clear opt-out mechanisms. DeepSeek has been less transparent about DeepSeekBot’s crawling patterns and policies, with some website owners reporting more aggressive behavior compared to GPTBot or Claude-Web. Geographic origin matters for data privacy and regulatory concerns, as Chinese AI crawlers may raise different compliance questions than US or European ones, especially for sites handling sensitive data.

DeepSeek Company Background and Growth

DeepSeek is a Chinese AI startup founded in 2023, focusing on large language models and AI assistants competing with ChatGPT and Claude. DeepSeek released models like DeepSeek-V2 and DeepSeek-Coder, gaining traction in the AI community. The company saw rapid growth in 2024, attracting users seeking alternatives to US-based AI services. Its models demonstrated competitive performance on benchmarks, bolstering China’s efforts in domestic AI capabilities. DeepSeek operates a chat interface similar to ChatGPT, allowing user interaction with its AI models. The company also released some models as open source, enabling developers to download and use them, helping expand the user base and gather feedback. DeepSeek’s growth trajectory aligns with the AI boom following ChatGPT’s launch. As the company scaled up, it required more training data, likely driving DeepSeekBot’s deployment to systematically collect web content. While the size of DeepSeek’s training datasets isn’t disclosed, competitive language models typically train on hundreds of billions to trillions of text tokens. DeepSeekBot plays a crucial role in gathering this vast training data.

Privacy and Data Usage Considerations

When DeepSeekBot crawls your site, your content becomes part of DeepSeek’s training data, raising important data usage and privacy questions. Publicly accessible web content is generally fair game for crawling under most legal frameworks. However, not all website owners are comfortable with AI companies using their content. Training data integrates into AI models in complex ways. Although the models learn patterns without storing exact copies, they can sometimes generate text resembling training data. This poses potential copyright and attribution concerns. For businesses with proprietary content, allowing AI crawlers may indirectly benefit competing AI products. For content creators, AI models might generate similar content without attribution. Some jurisdictions are developing AI training data regulations. The EU’s AI Act and US copyright lawsuits could eventually restrict AI companies’ web content collection. For now, website owners must proactively block crawlers to opt out. DeepSeek’s privacy policy and data handling practices are crucial for sites with user-generated content or personal information. Even if data is public, scraping it for AI training might conflict with user expectations or terms of service. Website owners should review their privacy policies to ensure coverage of third-party crawling and AI training scenarios.

Impact on Web Infrastructure

AI crawlers like DeepSeekBot impact website operators. Each request consumes server resources and bandwidth, and aggressive crawling can significantly increase hosting bills, especially for smaller sites. Some website owners report DeepSeekBot making numerous requests quickly, potentially slowing sites or triggering rate limits. Large sites with robust infrastructure manage this better than small blogs. The cumulative effect of AI crawlers is substantial, with a single site potentially being crawled by GPTBot, DeepSeekBot, Claude-Web, Bytespider, and others simultaneously. This scenario creates a tragedy of the commons where individual AI companies benefit from crawling, but the collective burden falls on site operators. Content delivery networks and caching help mitigate some impact. Cached content serves crawlers without hitting origin servers for every request, but this only works if crawlers respect caching headers. Web developers can implement rate limiting specifically for known AI crawlers, allowing some crawling while preventing resource exhaustion. Balancing accessibility (allowing crawler access) with infrastructure protection (preventing abuse) is challenging.

Conclusion

DeepSeekBot is DeepSeek’s web crawler designed to collect training data for their AI models. Like similar crawlers from OpenAI, Anthropic, and Google, it scans publicly accessible websites to build datasets. The bot identifies itself through a specific user-agent string that website owners can detect in their logs. DeepSeekBot exists because modern AI models require enormous amounts of text data to train effectively. Web crawling provides a scalable means of gathering this data from across the internet. Website owners uninterested in having their content used for AI training can block DeepSeekBot through robots.txt files, server configurations, or firewall rules. The Chinese AI crawler operates similarly to its Western counterparts but with less public documentation about its policies. As DeepSeek continues to grow and compete in the AI market, DeepSeekBot will likely remain active on the web. Understanding how it works and controlling its access gives website operators the tools to make informed decisions about their content.

Frequently Asked Questions

How does DeepSeekBot collect data?

DeepSeekBot collects data by crawling the web and scanning publicly accessible pages. It visits sites, downloads HTML content, extracts the readable text, and stores it for training DeepSeek's AI models. This automated process allows DeepSeek to gather large amounts of diverse text efficiently.

What can website owners do if they want to prevent DeepSeekBot from crawling their site?

Website owners can prevent DeepSeekBot from crawling their sites by updating their robots.txt file to include directives to disallow the crawler. They can also implement server-level blocks or use firewalls to return error codes when DeepSeekBot is detected. Each method ensures that the bot doesn't access specific parts or the entirety of the website.

Are there any legal concerns associated with DeepSeekBot's web crawling?

Yes, there are legal considerations surrounding web crawling, particularly regarding copyright and data use. While publicly accessible content is generally fair game, some website owners may have concerns about how their content is utilized in AI training. As regulations evolve, especially in areas like the EU and US, compliance will be increasingly important.

What is the significance of the user-agent string for DeepSeekBot?

The user-agent string identifies DeepSeekBot to web servers, distinguishing it from regular user traffic. This identification allows website owners to track its activity in server logs and take action, such as blocking it if desired. The string provides transparency about the bot's activity and origin, which is crucial for web management.

How does DeepSeekBot compare to other AI crawlers?

DeepSeekBot shares similarities with other AI crawlers, such as those operated by OpenAI and Google, primarily in purpose and function. However, it differs in the level of transparency regarding its crawling policies, as some owners report more aggressive crawling behavior. Each AI crawler is managed by its respective company and may have different configurations and compliance with robots.txt.

What impact does DeepSeekBot have on website performance?

DeepSeekBot can impact website performance, particularly for smaller sites, by consuming server resources and bandwidth. Aggressive crawling may slow down website response times or increase hosting costs due to higher traffic levels. Implementing caching and rate limiting can help mitigate these effects while allowing the crawler to access content.

What resources are necessary to effectively manage DeepSeekBot's crawling?

To effectively manage DeepSeekBot, website owners should maintain their robots.txt file, which requires regular updates as site content changes. Knowledge of server configurations for blocking requests and monitoring access logs is also beneficial. Utilizing CDNs and caching services can further optimize resource use and manage crawler requests without straining the hosting infrastructure.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.