Understanding PanguBot: Huawei's AI Crawler Explained

Complete guide to PanguBot, Huawei's AI crawler for PanGu model training. Learn its purpose, user-agent details, and how to block it.

What is PanguBot and Why Does It Matter

PanguBot is Huawei’s specialized web crawler designed to collect training data for the Huawei PanGu large language models. As a critical tool in AI development, it systematically scans websites across the internet to gather text content that feeds into Huawei’s AI. Similar to how OpenAI uses GPTBot or Google uses GoogleBot, PanguBot is Huawei’s AI data collection tool for advancing its AI capabilities, including the development of models like PanGu-Σ.

Web crawlers like PanguBot are essential because large language models need massive amounts of text data to learn language patterns and generate human-like responses, as seen in the development of models like DeepSeek-V3.2-Exp. These bots automatically browse websites, extract content, and store it for AI training purposes. For website owners and developers, understanding which bots like PanguBot are accessing your content is crucial, as it allows you to control whether your data is used for AI training. PanguBot specifically targets content to improve the PanGu models, Huawei’s answer to models like GPT-4 or Claude, as part of their efforts to enhance AI capabilities. It operates alongside PetalBot, another Huawei crawler focused on search engine indexing, contributing to Huawei’s AI and search ecosystem.

The Connection Between PanguBot and Huawei’s PanGu Models

Huawei developed the PanGu series as its flagship large language models, competing directly with advanced AI systems like ChatGPT and Claude. These models come in different versions optimized for tasks including natural language processing, code generation, and multimodal understanding.

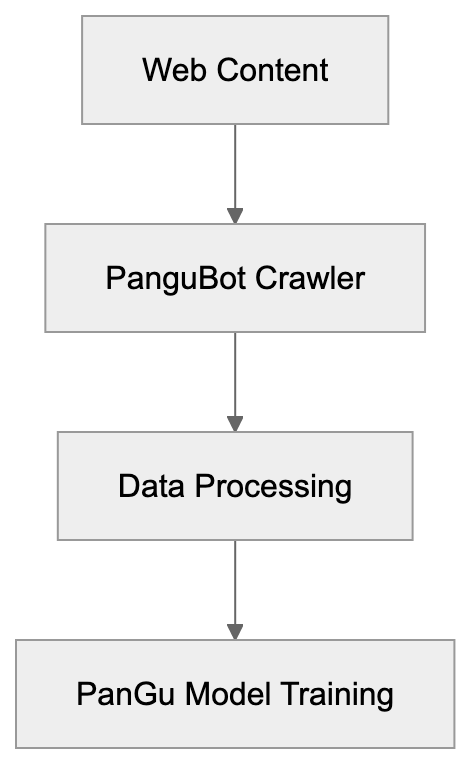

PanguBot Data Collection Flow:

PanguBot acts as the primary AI training bot for collecting data to train these models. Continuous access to fresh web content is vital; without it, the PanGu models risk becoming outdated and less effective. The crawler identifies publicly accessible text content, downloads it, and processes it into training datasets. This ongoing process is crucial as Huawei works to enhance and update its AI models.

Here’s how it works: PanguBot crawls the web, collects text data, sends it to Huawei’s processing systems, and eventually, that data becomes part of the training corpus for PanGu models. This creates a feedback loop where improved models help identify more useful training data, leading to even better models. By allowing PanguBot access, website owners contribute to Huawei’s AI development, knowingly or not.

How to Identify PanguBot Visiting Your Website

PanguBot vs PetalBot Purpose:

PanguBot identifies itself through its unique user-agent string when accessing websites:

Mozilla/5.0 (compatible; PanguBot/1.0; +https://bots.pangu.huawei.com/robots)

This string indicates the request comes from PanguBot version 1.0 and includes a reference URL for more information about the crawler. To confirm PanguBot visits, check your server logs or analytics data for this specific user-agent pattern.

Generally, the bot operates from IP addresses linked with Huawei’s infrastructure, but the precise IP ranges may change as Huawei scales its crawling activities. Most web analytics tools will categorize PanguBot as a bot, ensuring it doesn’t skew your site traffic statistics or user behavior data.

Website administrators can monitor PanguBot activity by reviewing access logs, setting up specific tracking for the user-agent string, or using analytics platforms categorizing bot traffic separately. Understanding when and how often PanguBot visits aids in deciding whether to allow or block its access.

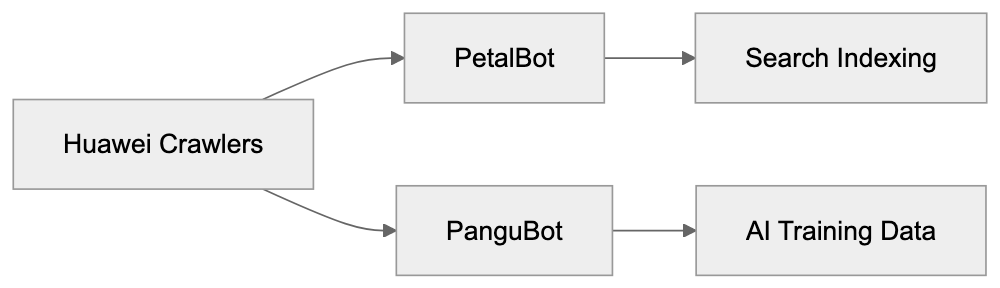

PanguBot vs PetalBot: Understanding the Difference

Huawei operates two main web crawlers serving distinct purposes. PetalBot is Huawei’s general-purpose search crawler, akin to Googlebot or Bingbot, indexing web content for Huawei’s search services and the Petal Search app. In contrast, PanguBot focuses on collecting training data for Huawei AI models.

The key distinction lies in their end use: PetalBot helps users discover websites through search results, whereas PanguBot harvests website content to train large language models capable of generating text, answering queries, and performing other AI tasks. Both crawlers respect robots.txt directives and can be managed independently.

Website owners may choose to allow PetalBot while blocking PanguBot, or vice versa. Allowing PetalBot might increase visibility in Huawei’s search ecosystem, especially in certain markets. Blocking PanguBot ensures your content isn’t utilized for AI training, not affecting search indexing. The two bots operate independently, though they originate from the same company.

Some websites may encounter both crawlers, while others only see one or none. Crawling frequency and depth depend on factors like website size, update frequency, and content type. Neither bot is known to be particularly aggressive, compared to other major crawlers.

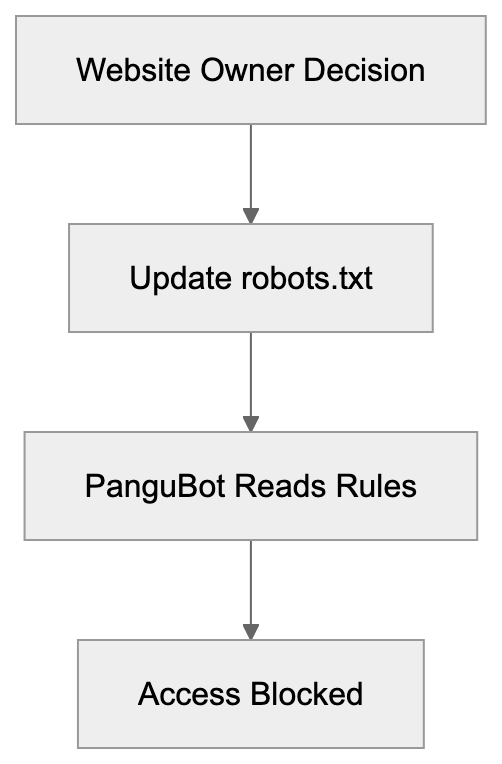

How to Block PanguBot from Your Website

Website owners have various options for preventing PanguBot from accessing their content, with the most common method using the robots.txt file. This file resides in your website’s root directory and instructs crawlers on accessible areas of your site.

To block PanguBot entirely, include these lines in your robots.txt file:

User-agent: PanguBot

Disallow: /This directive tells PanguBot it cannot crawl any part of your website. The bot should respect this rule and desist from attempting to access your content. Most legitimate crawlers, including PanguBot, adhere to robots.txt rules, although compliance is voluntary and not legally enforced universally.

For more nuanced control, you can block specific directories while allowing others:

User-agent: PanguBot

Disallow: /private/

Disallow: /user-content/

Allow: /public/This approach protects sensitive or user-generated content while allowing access to general information pages. Blocks can also be implemented at the server level through .htaccess files (for Apache servers) or Nginx configuration files. These methods check the user-agent string and return a 403 Forbidden or 404 Not Found response when PanguBot attempts access.

Some content management systems and security plugins offer options to block specific bots without manual configuration file edits. Refer to your CMS settings or security plugin documentation for these features.

Blocking PanguBot Implementation:

Comparing PanguBot to Other AI Training Crawlers

Many companies operate AI training crawlers with varying characteristics and purposes. Here’s how PanguBot compares:

| Crawler | Company | Primary Purpose | Robots.txt Support | Known Since |

|---|---|---|---|---|

| PanguBot | Huawei | PanGu model training | Yes | 2023 |

| GPTBot | OpenAI | GPT model training | Yes | 2023 |

| CCBot | Common Crawl | Public dataset creation | Yes | 2011 |

| Claude-Web | Anthropic | Claude model training | Yes | 2023 |

| Google-Extended | Gemini/Bard training | Yes | 2023 |

All these crawlers support robots.txt directives, enabling website owners to block them if desired. GPTBot and Claude-Web emerged around the same time as PanguBot, reflecting the industry’s rush to gather training data for large language models. CCBot has operated longer and contributes to the Common Crawl dataset used by many AI researchers.

Crawling behavior varies: some bots visit frequently, while others take a lighter approach. PanguBot appears to fall in the middle range based on reported server load impacts. None of these bots execute JavaScript or interact with dynamic content like browser-based crawlers.

Website owners concerned about AI training can block all these bots individually via robots.txt or employ a blanket approach blocking entire categories of AI crawlers. The decision depends on your preference for content exposure in AI development and the companies you trust with that data.

Business and Privacy Considerations

Companies and website owners face important decisions regarding AI training bots. Blocking PanguBot and similar crawlers prevents your content from becoming part of AI training datasets. This is crucial if you publish proprietary information, original research, creative works, or user-generated content you want to protect.

Conversely, allowing these bots might increase the visibility of your ideas and information in AI-generated responses. When large language models train on your content, they may reference or synthesize that information in related user queries, providing indirect exposure without direct attribution or links back to your site.

For small business owners, the decision often involves weighing content protection against potential reach. E-commerce sites might block these bots to prevent product descriptions from being used in competing AI tools. News publishers face similar concerns about original reporting being absorbed without proper compensation or credit.

Developers and technical teams should implement blocking decisions in line with company policy, typically by updating robots.txt files, monitoring crawler access patterns, and reviewing which bots are accessing your infrastructure. Marketing professionals and SEO experts must recognize that blocking AI training bots doesn’t affect traditional search engine indexing, as different crawlers are used.

Content marketers should deliberate on whether their strategy includes or excludes AI training purposes. Some organizations view contributing to AI training as part of being a good internet citizen, while others see it as giving away valuable intellectual property without compensation. Neither position is inherently wrong; it depends on your specific situation and values.

The Future of AI Crawlers and Data Collection

The number of AI training crawlers continues to grow as more companies develop large language models. Huawei’s PanguBot is one example in a proliferating data collection ecosystem. Website owners can expect more of these crawlers as AI development accelerates globally.

Regulatory frameworks surrounding AI training data are still evolving. Some jurisdictions are considering laws requiring explicit permission before using web content for AI training, while others adopt a more permissive approach, treating publicly accessible content as fair game. These legal developments will likely impact how crawlers like PanguBot operate in the future.

Technical standards for crawler identification and control continue to progress. The robots.txt protocol remains the primary mechanism, yet discussions about more sophisticated permission systems are ongoing. Proposals include machine-readable licenses specifying exact content usage, including AI training allowances.

Website administrators should stay informed about new crawlers entering the space and update their blocking rules accordingly. Maintaining an up-to-date robots.txt file reflecting your content usage preferences becomes increasingly vital as AI training becomes more widespread. Regular audits of server logs help identify new or unknown crawlers attempting content access.

End

PanguBot serves as Huawei’s dedicated crawler for collecting training data for their PanGu large language models. It operates similarly to other AI training bots from companies like OpenAI and Anthropic, systematically gathering web content to enhance AI capabilities. Website owners can recognize PanguBot through its specific user-agent string and manage its access via robots.txt directives or server-level blocks. Deciding whether to allow or block PanguBot hinges on preferences concerning content protection and AI training contribution. Understanding the difference between PanguBot and PetalBot aids in making informed choices about permitting Huawei crawlers. As AI development continues expanding, managing crawler access becomes essential for website administration and content strategy. Regular monitoring and updating of blocking rules ensure your content is utilized only in approved ways.

Frequently Asked Questions

How can I check if PanguBot is visiting my website?

You can identify PanguBot visits by looking at your server logs or analytics data for its unique user-agent string: `Mozilla/5.0 (compatible; PanguBot/1.0; +https://bots.pangu.huawei.com/robots)`. Most web analytics platforms categorize it as a bot, so it shouldn't affect your overall traffic stats.

What should I do if I want to block PanguBot from accessing my site?

To block PanguBot, you must edit your robots.txt file by including the directive `User-agent: PanguBot` followed by `Disallow: /`. This rule instructs PanguBot not to crawl any part of your site. You can also manage access to specific directories.

What is the difference between PanguBot and PetalBot?

PanguBot is specifically designed for gathering training data for Huawei’s PanGu models, while PetalBot is a general-purpose search crawler that indexes web content for Huawei's search services. They serve different purposes even though both are developed by Huawei.

Can blocking PanguBot affect my website's visibility in search results?

Yes, blocking PanguBot will prevent your content from being used in AI training but won't impact your site’s indexing by PetalBot or other search engines. You may maintain visibility in Huawei's search ecosystem by allowing PetalBot while blocking PanguBot.

What are the implications of allowing PanguBot access to my content?

Allowing PanguBot access might increase visibility for your ideas as they could be referenced in AI responses, but it also means your content could be used for AI training without attribution. It's essential to weigh the potential benefits of increased exposure against concerns over control of your original material.

How often do crawlers like PanguBot access websites?

Crawling frequency can vary based on factors such as your website's size, how frequently it's updated, and the type of content it has. Generally, PanguBot is reported to have a moderate crawling frequency and doesn't tend to be overly aggressive.

Are there legal regulations for AI training crawlers like PanguBot?

Regulations around AI training data are still developing. Some jurisdictions may require explicit permission for content use, while others may treat publicly accessible content as fair game. Keeping abreast of these legal changes is essential for website owners who wish to manage crawler access effectively.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.