Understanding the SecurityTrails Security Research Crawler

Learn about the SecurityTrails bot: its purpose, features, and applications in DNS, domain, and IP intelligence.

What is the SecurityTrails Crawler

The SecurityTrails crawler is a specialized web crawler designed for security research and intelligence gathering. It focuses on collecting DNS intelligence, domain details, and IP intelligence across the internet, providing comprehensive data for security analysis. Operating as part of SecurityTrails’ infrastructure, it builds comprehensive databases of domain and network information, enabling detailed threat assessments. Security researchers, penetration testers, and IT professionals use it to map attack surfaces and uncover potential vulnerabilities, enhancing proactive security measures. The SecurityTrails bot continuously scans web properties to update records of DNS changes, SSL certificates, and domain configurations, ensuring up-to-date security intelligence. This data is crucial for threat intelligence, conducting security audits, and infrastructure monitoring. Unlike general web crawlers that index content for search engines, security research bots target technical metadata and network configuration. Companies leverage this domain intelligence to protect assets, monitor brand abuse, and track infrastructure changes across competitors or threat actors.

Why Security Research Crawlers Exist

Security research crawlers like the SecurityTrails crawler are vital in modern cybersecurity. Organizations need to understand their external attack surface and monitor changes to their digital footprint. Manual tracking of DNS records is impractical due to frequent changes, so the SecurityTrails crawler automates the collection of domain registration data, nameserver configurations, and historical DNS records. This helps security teams detect unauthorized changes, identify shadow IT, and discover forgotten subdomains that may be vulnerable. It also monitors SSL certificates to reveal new services or expired security credentials. Without automated security research bots, keeping accurate inventories of internet-facing assets would require massive manual effort. Security researchers use this data to investigate malicious infrastructure, track phishing campaigns, and analyze malware command and control servers. Continuous operation is necessary as DNS and domain data change 24/7 across millions of domains.

How SecurityTrails Crawler Operates

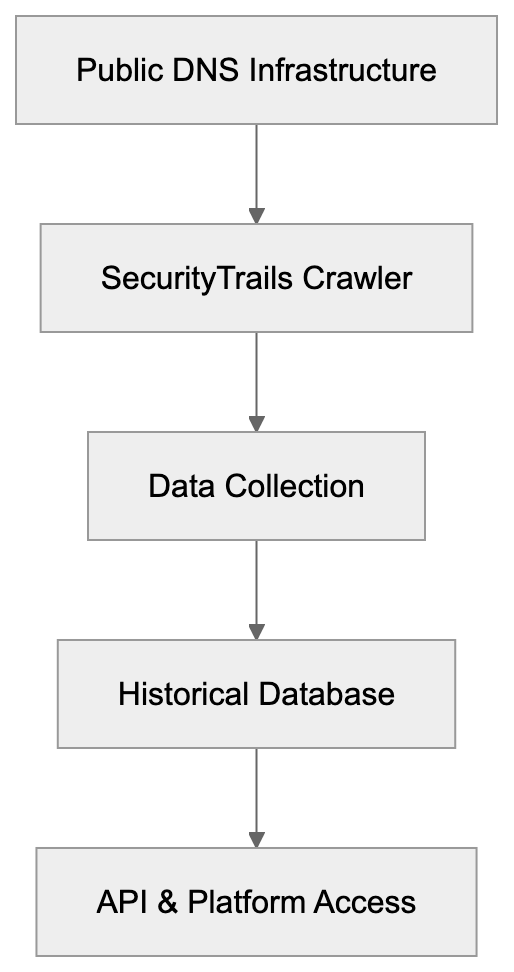

SecurityTrails Crawler Operation Flow:

The SecurityTrails bot identifies itself through its user-agent string, which includes “SecurityTrails” for easy recognition in server logs. It accesses publicly available DNS records, WHOIS information, and SSL certificate data without needing authentication. Regular scans detect changes in A records, MX records, TXT records, and other DNS entry types. Respecting robots.txt files, it includes contact information in its user-agent for webmasters. The focus is on technical metadata rather than website content or user data. Operating from multiple IP addresses distributes load and maintains reliability. Scan frequency varies, with high-value domains checked daily and others weekly or monthly. The collected data is available through the SecurityTrails API and web interface, providing current and historical domain intelligence.

Key Features and Capabilities

SecurityTrails crawler offers distinct capabilities for security bot intelligence:

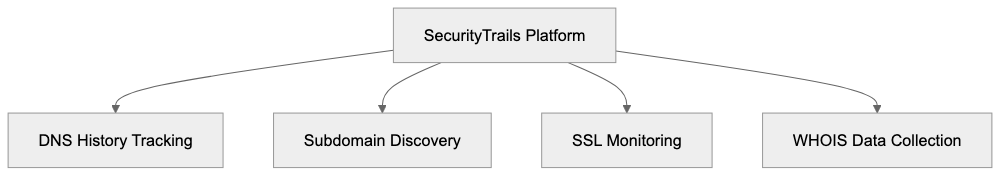

- Maintains historical DNS records for millions of domains spanning several years.

- Enables subdomain discovery, revealing an organization’s complete domain infrastructure.

- Monitors SSL certificates for issuance, expiration, and certificate authority details.

- Collects WHOIS data like registrar information, registration dates, and, where available, contact details.

- Links domains to hosting providers and identifies shared hosting relationships through IP intelligence.

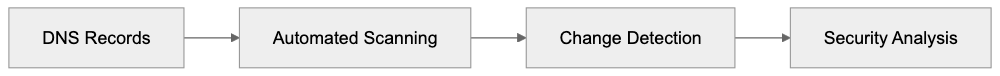

DNS Intelligence Data Collection Process:

- Detects DNS changes in near real-time.

SecurityTrails aggregates this data to illustrate relationships between domains, IP addresses, and infrastructure patterns. The crawler can identify typosquatting and brand abuse by analyzing similar domain registrations, and it tracks open ports and services on discovered IP addresses. All information is searchable through the SecurityTrails platform, aiding rapid security incident investigation and infrastructure research.

Blocking or Managing the SecurityTrails Bot

Webmasters can manage SecurityTrails crawler access through several methods. The simplest method is using robots.txt directives to block the bot by adding a specific user-agent block for SecurityTrails. However, given the crawler mainly collects DNS data instead of web content, robots.txt is less effective. More robust blocking requires firewall rules to deny requests from known SecurityTrails IP addresses. The company doesn’t publish a complete list of these IPs, making this challenging. Some organizations permit the crawler as it collects publicly available DNS information. Rate limiting can mitigate performance impact if necessary, but for complete privacy, combine IP blocking, geo-restrictions, and non-standard DNS configurations. Note that blocking the crawler doesn’t prevent public DNS record collection, as these can be directly queried from authoritative nameservers.

SecurityTrails Compared to Alternative Tools

Several services offer similar DNS intelligence and domain research capabilities. Here’s a comparison:

| Tool | Primary Focus | Historical Data | API Access | Pricing Model |

|---|---|---|---|---|

| SecurityTrails | DNS & domain intelligence | Multi-year DNS history | Yes, paid tiers | Freemium with paid plans |

| Shodan | Internet-connected devices | Limited historical data | Yes, paid tiers | Freemium with paid plans |

| Censys | Certificate & host scanning | Certificate transparency logs | Yes, paid tiers | Freemium with paid plans |

| VirusTotal | Malware & URL scanning | File and URL analysis | Yes, free & paid | Free with rate limits |

| RiskIQ | Threat intelligence | Extensive historical data | Enterprise only | Enterprise pricing |

SecurityTrails stands out with its complete DNS historical records and subdomain discovery capabilities. Shodan focuses more on exposed services and IoT devices than DNS infrastructure. Censys excels in certificate transparency and TLS configuration analysis. VirusTotal is primarily a malware scanning service with some domain reputation features. RiskIQ targets enterprise customers with broad threat intelligence beyond DNS data. Security professionals often use multiple tools since each offers unique internet infrastructure perspectives. SecurityTrails is particularly strong in DNS reconnaissance and domain relationship mapping, with continuous scans ensuring fresher data than on-demand queries.

SecurityTrails Key Capabilities:

Use Cases for Security Professionals

Security teams leverage SecurityTrails data for various operations:

- Penetration Testing: Identifying subdomains and potential entry points during security assessments.

- Incident Response: Investigating suspicious domains through DNS history and infrastructure relationships.

- Brand Protection: Monitoring for typosquatting or phishing domains impersonating the organization.

- Threat Intelligence: Tracking malicious infrastructure via DNS patterns and hosting relationships.

- Bug Bounty Hunting: Discovering forgotten or misconfigured subdomains that may be vulnerable.

- Security Operations Centers: Monitoring for unauthorized DNS changes indicating compromise.

- Mergers and Acquisitions: Cataloging technical assets during due diligence processes.

- Research: Mapping threat actor infrastructures by analyzing shared hosting and DNS patterns.

Compliance efforts are also supported, as organizations maintain accurate inventories of internet-facing assets required by regulatory frameworks.

Privacy and Data Collection Considerations

The SecurityTrails crawler collects technically public information, but its aggregation raises privacy questions. DNS records are public to enable internet functionality, so collecting them doesn’t constitute unauthorized access. However, aggregation creates intelligence that casual observation wouldn’t reveal. Organizations with sensitive operations often minimize their DNS footprint. The crawler focuses exclusively on technical infrastructure metadata, avoiding user data, content, or PII. Some privacy advocates express concerns that historical DNS databases enable surveillance capabilities not possible in earlier internet eras. SecurityTrails sells access to this aggregated intelligence as a commercial service, revealing organizational relationships, technology choices, and infrastructure changes that companies might prefer to keep confidential. Despite these concerns, the information is fundamentally public and accessible, though less conveniently.

Technical Implementation Details

The SecurityTrails crawler employs several technical methods to gather comprehensive data:

- Conducts active DNS queries against authoritative nameservers.

- Monitors passive DNS data from network sensors and partners.

- Attempts zone transfers where misconfigurations allow.

- Uses certificate transparency logs to discover subdomains and track SSL deployments.

- Performs reverse DNS lookups on IP address ranges to find more domains.

- Analyzes DNS zone files from accessible top-level domains.

- Uses a distributed architecture to manage large-scale scanning.

- Utilizing data normalization to clean and standardize records from diverse sources.

- Balances scan comprehensiveness while respecting server resource limits.

- Machine learning algorithms help identify related infrastructure and DNS pattern insights.

All collected data is stored in databases optimized for fast searching across billions of historical records.

The SecurityTrails security research crawler plays a crucial role in modern cybersecurity infrastructure. Automating the collection of DNS records, domain intelligence, and IP address information is essential for security professionals. The bot operates by continuously scanning public DNS infrastructure and aggregating years of historical data. Security teams use this intelligence for penetration testing, threat hunting, brand protection, and asset management. While the crawler gathers publicly available data, aggregation provides substantial research capabilities. Organizations can block the bot using various measures, though this doesn’t prevent public DNS record collection by other means. SecurityTrails competes with tools like Shodan, Censys, and VirusTotal, maintaining unique strengths in DNS historical analysis and subdomain discovery. Understanding security research crawlers equips both security professionals and website operators to effectively use tools and make informed bot access management decisions.

Frequently Asked Questions

What types of data does the SecurityTrails crawler collect?

The SecurityTrails crawler primarily collects DNS intelligence, which includes historical DNS records, WHOIS data, SSL certificate details, and related network configurations. This data helps security professionals assess their digital infrastructure and identify vulnerabilities.

How frequently does the SecurityTrails crawler update its data?

The crawler updates its data regularly, with high-value domains being scanned daily, while less critical domains may be checked weekly or monthly. This continuous operation ensures that users have access to the most recent information regarding their digital assets.

Can organizations block the SecurityTrails crawler from accessing their data?

Yes, organizations can block the SecurityTrails bot using robots.txt directives or more robust methods such as firewall rules. However, since the crawler collects publicly available data, blocking it does not prevent others from accessing the same information through direct queries.

How does SecurityTrails compare to other DNS intelligence tools?

SecurityTrails stands out due to its extensive historical DNS records and operational capabilities like subdomain discovery. While other tools like Shodan and Censys focus on different aspects of internet infrastructure, SecurityTrails provides a comprehensive solution specifically for DNS reconnaissance and domain relationship mapping.

What are common use cases for the SecurityTrails crawler?

Security professionals use the SecurityTrails data for various purposes, including penetration testing, incident response, brand protection, and threat intelligence. It is particularly useful for identifying vulnerabilities, tracking malicious activities, and ensuring compliance with regulatory frameworks.

Is the information collected by the SecurityTrails crawler private?

The information collected by the SecurityTrails crawler is technically public, as DNS records are required for internet functionality. However, the aggregation of this data can raise privacy concerns, especially for organizations looking to minimize their digital footprint.

What technical methods does the SecurityTrails crawler use to gather data?

The SecurityTrails crawler employs methods such as active DNS querying, passive DNS data monitoring, and zone file analysis to gather comprehensive information. By using a distributed architecture, it efficiently scans and normalizes data from diverse sources while respecting server resource limits.

Track Your AI Visibility

See how AI chatbots like ChatGPT, Claude, and Perplexity discover and recommend your brand.